Are your observability pipelines overflowing with data but lacking insight?

In almost every architecture review I’ve done this year, from Kubernetes-heavy banking stacks to telco-grade event pipelines, I’ve found the same issue: tons of data, little clarity.

This post shows how to design high-signal cross-cloud observability platforms. You’ll learn what to ingest (and what to drop), how to architect across cloud providers, and which optimizations deliver speed, cost savings, and SLO clarity.

Why Cross-Cloud Observability Is No Longer Optional

Modern infrastructure lives across:

- AWS EKS clusters

- Azure AKS microservices

- GCP Cloud Functions

- SaaS APIs + third-party SDKs

This fragmentation breaks unified tracing, bloats costs, and creates operational blind spots.

A well-architected observability pipeline stitches this together, ensuring:

- Actionable telemetry across services

- Aligned SLOs, alerting, and RCA across teams

- Cost control through selective ingestion

It’s the glue layer between raw data and decision-making.

What to Ingest—and Why

| Data Type | Ingest | Why It Matters |
| --- | --- | --- |
| Logs | WARN/ERROR+, app logs with context / CSV | Troubleshooting, RCA |
| Metrics | RED metrics, infra SLO signals | Health checks, alert thresholds |
| Traces | Full traces with errors or high latency | Distributed RCA, performance profiling |
| Events | Deploy, restart, scaling, failures | Change tracking, timeline correlation |
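To make the table concrete, here is a minimal Python sketch of the Logs and Events rows as an ingest decision. It assumes ECS-style flat keys (log.level, event.action), a hypothetical type field that tags each record, and illustrative event names; it implements only the WARN/ERROR+ threshold, not the "app logs with context" rule.

```python
# Severity ranking; hypothetical, adjust to the level names your apps emit.
SEVERITY_RANK = {"trace": 0, "debug": 1, "info": 2, "warn": 3, "warning": 3, "error": 4, "fatal": 5}
WARN_RANK = SEVERITY_RANK["warn"]

# Event actions worth keeping, mirroring the Events row (names are illustrative).
KEPT_EVENT_ACTIONS = {"deploy", "restart", "scaling", "failure"}


def should_ingest(record: dict) -> bool:
    """Apply the Logs and Events rows of the ingest table to one record."""
    kind = record.get("type")  # hypothetical field tagging the signal type
    if kind == "log":
        rank = SEVERITY_RANK.get(str(record.get("log.level", "")).lower())
        # Fail open: keep logs whose level we do not recognise.
        return rank is None or rank >= WARN_RANK
    if kind == "event":
        return record.get("event.action") in KEPT_EVENT_ACTIONS
    # Metrics and traces are trimmed elsewhere (RED selection, tail sampling).
    return True


records = [
    {"type": "log", "log.level": "DEBUG", "message": "cache hit"},
    {"type": "log", "log.level": "error", "message": "payment failed"},
    {"type": "event", "event.action": "deploy"},
    {"type": "event", "event.action": "heartbeat"},
]
kept = [r for r in records if should_ingest(r)]
print(len(kept))  # 2
```

Failing open on unknown levels is a deliberate choice: a misspelled level should cost a few bytes, not hide an outage.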

The Pipeline Blueprint (Across Clouds)

Here’s my field-tested 4-layer pattern, adaptable to any multi-cloud setup.

Collection

- Elastic Agent / OTel Collector

- Edge-side filters: drop log.level == debug

- Enrich with cloud metadata (cloud.provider, k8s.pod.name)
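In production this enrichment is usually handled by the OTel Collector's k8sattributes and resourcedetection processors. The Python sketch below only illustrates the edge logic: drop debug first, then attach metadata. It assumes a sidecar-style agent, so the hypothetical CLOUD_PROVIDER and POD_NAME environment variables describe the application's own pod; a DaemonSet agent would need a per-record lookup instead.

```python
import os
from typing import Optional

# Hypothetical env vars, e.g. injected into the pod via the Kubernetes Downward API.
EDGE_METADATA = {
    "cloud.provider": os.environ.get("CLOUD_PROVIDER", "unknown"),
    "k8s.pod.name": os.environ.get("POD_NAME", "unknown"),
}


def process_at_edge(record: dict, metadata: dict = EDGE_METADATA) -> Optional[dict]:
    """Drop debug logs, then attach cloud/k8s metadata to everything else."""
    if str(record.get("log.level", "")).lower() == "debug":
        return None  # filtered before it leaves the node
    enriched = dict(metadata)
    enriched.update(record)  # fields already on the record take precedence
    return enriched


meta = {"cloud.provider": "aws", "k8s.pod.name": "checkout-7d9f"}
print(process_at_edge({"log.level": "debug", "message": "noise"}, meta))  # None
print(process_at_edge({"log.level": "error", "message": "timeout"}, meta)["cloud.provider"])  # aws
```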

Transport

- Kafka / Amazon MSK / Google Cloud Pub/Sub

- Topic separation by app/env/cloud

- Buffer for retries + schema validation (Avro, JSON)
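Topic separation and validation can be sketched as a routing function. This is a hypothetical Python illustration: the telemetry.<app>.<env>.<cloud> naming scheme, the dead-letter topic, and the type-check "schema" are all assumptions, the last one standing in for real Avro or JSON Schema validation. Retry buffering is left to Kafka itself, which retains messages so consumers can re-read anything they have not yet committed.

```python
import json

# Hypothetical minimal schema: field name -> required type (stand-in for Avro/JSON Schema).
REQUIRED_FIELDS = {"app": str, "env": str, "cloud.provider": str, "message": str}
DEAD_LETTER_TOPIC = "telemetry.dead-letter"  # hypothetical


def validate(record: dict) -> list:
    """Return schema violations; an empty list means the record is valid."""
    errors = []
    for field, expected in REQUIRED_FIELDS.items():
        if field not in record:
            errors.append(f"missing {field}")
        elif not isinstance(record[field], expected):
            errors.append(f"{field} is not {expected.__name__}")
    return errors


def route(record: dict) -> tuple:
    """Return (topic, payload): one topic per app/env/cloud, invalid records to dead letter."""
    payload = json.dumps(record)
    if validate(record):
        return DEAD_LETTER_TOPIC, payload
    topic = f"telemetry.{record['app']}.{record['env']}.{record['cloud.provider']}"
    return topic, payload


ok = {"app": "checkout", "env": "prod", "cloud.provider": "aws", "message": "paid"}
print(route(ok)[0])  # telemetry.checkout.prod.aws
print(route({"app": "checkout"})[0])  # telemetry.dead-letter
```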

Processing

- Logstash / Vector

- ECS field mapping + custom grok patterns

- Tail-based trace sampling
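Tail-based sampling is the least obvious step in this layer, so here is a minimal Python sketch of the decision it makes. The real work is done by the OpenTelemetry Collector's tail_sampling processor; this sketch assumes the spans of each trace are already buffered, and the 500 ms threshold and 20% fallback rate are hypothetical. The unit of decision is the whole trace, never a single span, so kept traces stay complete.

```python
import hashlib
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Span:
    trace_id: str
    name: str
    duration_ms: float
    status: str = "OK"  # "OK" or "ERROR"


def keep_trace(spans, latency_threshold_ms=500.0, sample_percent=20):
    """Decide once per whole trace: errors, slow spans, then a probabilistic fallback."""
    if any(s.status == "ERROR" for s in spans):
        return True
    if max(s.duration_ms for s in spans) >= latency_threshold_ms:
        return True
    # Hash the trace ID into 0..99 so every collector makes the same choice.
    bucket = int(hashlib.sha256(spans[0].trace_id.encode()).hexdigest(), 16) % 100
    return bucket < sample_percent


def tail_sample(buffered_spans, **policy):
    """Group already-buffered spans by trace ID and return the spans of kept traces."""
    traces = defaultdict(list)
    for span in buffered_spans:
        traces[span.trace_id].append(span)
    return [s for trace in traces.values() if keep_trace(trace, **policy) for s in trace]


buffer = [
    Span("t1", "GET /checkout", 120.0),
    Span("t1", "charge card", 80.0, status="ERROR"),
    Span("t2", "GET /health", 3.0),
]
kept = tail_sample(buffer, sample_percent=0)
print([s.name for s in kept])  # ['GET /checkout', 'charge card']
```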

Storage + Query

- Elastic ILM with hot/warm/cold tiers

- Searchable snapshots in S3/GCS

- Unified dashboards in Kibana, Grafana

- Lean, well-structured ingest also makes Elastic ML anomaly detection more useful: its unsupervised models can surface latent issues such as latency spikes and error bursts
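Elastic ML's unsupervised models are considerably more sophisticated than this, but to illustrate what "surfacing a latency spike" means, here is a rolling z-score check in Python. It is a plainly swapped-in technique, not how Elastic ML works internally; the window, threshold, sigma floor, and latency series are all hypothetical.

```python
from statistics import mean, pstdev


def find_spikes(latencies_ms, window=5, z_threshold=3.0, min_sigma_ms=1.0):
    """Flag points more than z_threshold std devs above the trailing window's mean."""
    spikes = []
    for i in range(window, len(latencies_ms)):
        history = latencies_ms[i - window:i]
        # Floor sigma so a perfectly flat history does not flag tiny wobbles.
        sigma = max(pstdev(history), min_sigma_ms)
        if (latencies_ms[i] - mean(history)) / sigma > z_threshold:
            spikes.append(i)
    return spikes


p95_latency = [100, 102, 98, 101, 99, 100, 450, 101, 100]
print(find_spikes(p95_latency))  # [6]
```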

Sample flows:

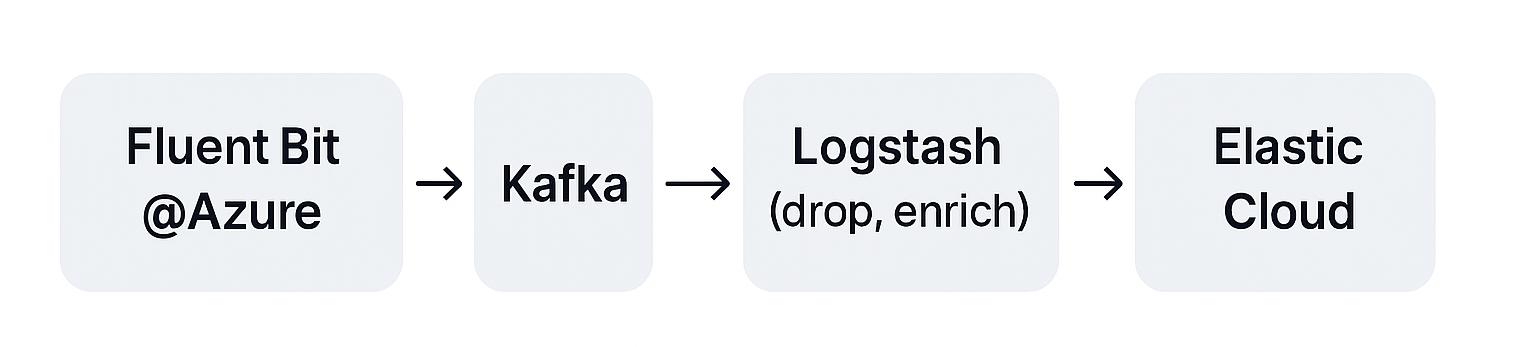

[Fluent Bit@Azure] --> Kafka --> Logstash (drop, enrich) --> Elastic Cloud

[OTel Collector@AWS] --> Kafka --> Vector (tail sample) --> Grafana Tempo

Smart Ingest Practices

Tail-Based Sampling (Traces):

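Keep only traces with errors, slow latencies, or business impact. The OpenTelemetry Collector's tail_sampling processor buffers spans for decision_wait, then keeps a trace if any of its policies matches, for example: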

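```yaml
processors:
  tail_sampling:
    decision_wait: 10s
    policies:
      # Each policy needs a name, a type, and a config block named after its type.
      - name: keep-errors
        type: status_code
        status_code:
          status_codes: [ERROR]
      - name: keep-slow
        type: latency
        latency:
          threshold_ms: 500  # example threshold; tune to your SLOs
      - name: sample-20-percent
        type: probabilistic
        probabilistic:
          sampling_percentage: 20
```

Log Drop Filters (Edge):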

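```
# Assumes a top-level key literally named "log.level" with lowercase values;
# for nested JSON ({"log": {"level": ...}}) use the record accessor $log['level'].
[FILTER]
    Name    grep
    Match   *
    Exclude log.level ^debug$
```

Index Lifecycle Management (ILM):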

Hot: 7d → Warm: 30d → Cold: snapshot in S3
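The tiering above maps onto an ILM policy. The Python sketch below builds the JSON body you would send to PUT _ilm/policy/<name>, reading the line as "move to warm at 7 days, to cold at 30 days" (ILM measures min_age from rollover when rollover is enabled). That reading, the rollover thresholds, and the repository name my-s3-repo, which must already be registered as an S3 snapshot repository, are assumptions.

```python
import json


def ilm_policy(snapshot_repo: str, warm_after: str = "7d", cold_after: str = "30d") -> dict:
    """Build an ILM policy body: hot with rollover, warm at warm_after, cold snapshot at cold_after."""
    return {
        "policy": {
            "phases": {
                "hot": {
                    "actions": {
                        # Illustrative rollover thresholds; min_age below counts from rollover.
                        "rollover": {"max_age": "1d", "max_primary_shard_size": "50gb"},
                        "set_priority": {"priority": 100},
                    }
                },
                "warm": {
                    "min_age": warm_after,
                    "actions": {"set_priority": {"priority": 50}},
                },
                "cold": {
                    "min_age": cold_after,
                    # Mounts the index from the snapshot repository (e.g. S3).
                    "actions": {"searchable_snapshot": {"snapshot_repository": snapshot_repo}},
                },
            }
        }
    }


print(json.dumps(ilm_policy("my-s3-repo"), indent=2))
```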

Route by Cloud

Use dynamic index patterns like:

logs-aws-app-prod-*

logs-gcp-app-staging-*

Common Pitfalls (And Fixes)

| Pitfall | Solution |
| --- | --- |
| Over-collecting low-value logs | Filter early at the edge (Filebeat/Fluent Bit) |
| Unlinked spans in tracing | Propagate a consistent trace.id with the OTel SDKs |
| Expensive hot storage | Use searchable snapshots for compliance archives |
| Tool sprawl per cloud | Standardize on ECS and a shared pipeline config |
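Standardizing on ECS mostly means renaming fields at ingest so every cloud's data lands under the same keys. A hypothetical Python sketch follows; the source-side names (severity, msg, traceId, and so on) are illustrative, while the targets (log.level, message, trace.id, span.id, @timestamp) are real ECS fields. In practice this would run as a rename step in Logstash or Vector.

```python
# Hypothetical per-source field names -> ECS field names.
ECS_FIELD_MAP = {
    "level": "log.level",
    "severity": "log.level",
    "msg": "message",
    "traceId": "trace.id",
    "spanId": "span.id",
    "ts": "@timestamp",
}


def to_ecs(record: dict) -> dict:
    """Rename known source fields to ECS names; unknown fields pass through unchanged."""
    out = {ECS_FIELD_MAP.get(key, key): value for key, value in record.items()}
    if "log.level" in out:
        out["log.level"] = str(out["log.level"]).lower()  # normalize casing across SDKs
    return out


azure_style = {"severity": "Error", "msg": "timeout", "traceId": "a1b2c3"}
aws_style = {"level": "ERROR", "message": "timeout", "trace.id": "a1b2c3"}
print(to_ecs(azure_style) == to_ecs(aws_style))  # True
```

Lowercasing log.level is a design choice that keeps edge filters such as ^debug$ working regardless of an SDK's casing.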

Log-Metric-Trace Correlation in Action

Your observability pipeline should enable multi-dimensional RCA. Here’s how I set it up using Elastic:

- APM trace triggers investigation

  High latency on /checkout is traced to payment-service.

- Log correlation kicks in

  In Kibana → APM → Error view, the linked log tab shows:

  ```json
  {
    "log.level": "error",
    "trace.id": "a1b2c3",
    "message": "NullReferenceException",
    "user.id": "10213"
  }
  ```

- Metric overlay confirms infra issues

  A Metricbeat dashboard shows a spike in heap usage and GC time.

All from a single click in Kibana, using trace.id, span.id, and ECS-mapped fields.
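Kibana performs this join for you through the APM UI; the Python sketch below only shows the logic behind that single click. Logs join exactly on trace.id, while metrics, which carry no trace context, join on service.name plus a time window. trace.id, service.name, log.level, and message are ECS fields; start_s, ts_s, duration_ms, the heap.used_pct metric, and the 60-second window are hypothetical.

```python
def correlate(trace_id, service, spans, logs, metrics, window_s=60.0):
    """Gather spans, logs, and metrics for one service within one trace."""
    svc_spans = [
        s for s in spans if s["trace.id"] == trace_id and s["service.name"] == service
    ]
    if not svc_spans:
        raise ValueError(f"no spans for {service} in trace {trace_id}")
    start = min(s["start_s"] for s in svc_spans)
    # Logs carry trace.id, so the join is exact.
    related_logs = [entry for entry in logs if entry.get("trace.id") == trace_id]
    # Metrics carry no trace.id, so join on service.name plus a time window.
    related_metrics = [
        m for m in metrics
        if m["service.name"] == service and abs(m["ts_s"] - start) <= window_s
    ]
    return {"spans": svc_spans, "logs": related_logs, "metrics": related_metrics}


spans = [
    {"trace.id": "a1b2c3", "service.name": "checkout", "start_s": 1000.0, "duration_ms": 2100},
    {"trace.id": "a1b2c3", "service.name": "payment-service", "start_s": 1000.2, "duration_ms": 1900},
]
logs = [
    {"trace.id": "a1b2c3", "log.level": "error", "message": "NullReferenceException"},
    {"trace.id": "ffff00", "log.level": "error", "message": "unrelated"},
]
metrics = [
    {"service.name": "payment-service", "ts_s": 1010.0, "name": "heap.used_pct", "value": 97},
    {"service.name": "payment-service", "ts_s": 5000.0, "name": "heap.used_pct", "value": 40},
]
evidence = correlate("a1b2c3", "payment-service", spans, logs, metrics)
print(len(evidence["logs"]), len(evidence["metrics"]))  # 1 1
```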

Final Take: Ingest with Intention

Cross-cloud observability isn’t about “collect everything.” It’s about ingesting what matters, with context, clarity, and cost-control built in.

The best pipelines act like smart APIs:

- Filter at source

- Enrich with context

- Route intelligently

- Store for fast queries + deep forensics

Need help re-architecting your observability stack?

Talk to Ashnik’s Elastic specialists to design a cross-cloud observability pipeline that delivers clarity, not chaos.