This blog emphasizes Kafka’s role in streaming data pipelines, highlighting its scalability, resilience, and simplified integration through Kafka Connect. A comparison with Oracle GoldenGate showcases Kafka’s advantages, while Confluent offers a wide range of prebuilt connectors.

Key Highlights

- Scalability & Resilience: Kafka enables highly scalable and resilient data pipelines by decoupling the source from the target.

- Simplified Integration: Kafka Connect simplifies integration with various technologies, offering prebuilt connectors.

- Kafka vs. GoldenGate: Kafka excels in scalability, fault tolerance, an extensive ecosystem, and open-source advantages.

- Real-World Example: Connect Kafka with Oracle DB using practical steps and success stories of transformed pipelines.

Table of Contents

- What is a Data Pipeline?

- Why it is better to use Kafka over GoldenGate

- How Kafka Connect works differently from GoldenGate

- Availability of Kafka connectors with a Confluent subscription

- Kafka Connect with Oracle Database

- Final Thoughts

What is a Data Pipeline?

In a streaming data pipeline, we send data from a source to a target continuously, as the data is generated.

Data pipelines perform much of the heavy lifting in any data-intensive application, moving and transforming data for data integration and analytics. They coordinate the movement and transformation of data from one or more sources to one or more destinations or targets. Pipelines are typically used to integrate data from multiple sources, creating a unified view of a meaningful business entity.

In the context of Apache Kafka, a streaming data pipeline means ingesting the data from sources into Kafka as it’s created and then streaming that data from Kafka to one or more targets. In this example, we’re offloading transactional data from a database to an object store, perhaps for analytical purposes.

Since Kafka is a distributed system, it’s highly scalable and resilient. We gain some great benefits by decoupling the source from the target and using Kafka to do this.

If the target system goes offline, there’s no impact on the pipeline. When the target returns online, it simply resumes from where it left off because Kafka stores the data. If the source system goes offline, the pipeline is also unaffected. The target doesn’t even realize that the source is down; it simply appears as if there is no data. When the source comes back online, data will start to flow again.

Kafka keeps the data for a configurable period, which we define through its retention properties (for example, retention.ms on a topic or log.retention.hours on the broker).
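As a rough illustration of how a retention period is expressed, here is a minimal Python sketch that converts a human-friendly duration into the millisecond string used by the topic-level `retention.ms` setting. The helper name `retention_ms` and the seven-day value are assumptions chosen for the example; the sketch only builds a config dictionary and does not talk to a cluster.

```python
from datetime import timedelta


def retention_ms(period: timedelta) -> str:
    """Convert a retention period into the millisecond string Kafka configs use."""
    return str(int(period.total_seconds() * 1000))


# Hypothetical topic-level overrides for a topic whose target may lag behind.
topic_config = {
    "retention.ms": retention_ms(timedelta(days=7)),  # keep records for seven days
    "cleanup.policy": "delete",                       # expired segments are deleted
}

print(topic_config)
```

A dictionary like this could then be passed to whichever admin tool or client library we use to create or alter the topic.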

If the target can’t keep up with the rate of data being sent to it, Kafka absorbs the backpressure by holding the data until the target catches up. Pipelines built around Kafka can evolve gracefully because Kafka stores data. We can send the same data to multiple targets independently. We can also replay the data to back-populate new copies of a target system or recover a target system after a failure.
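The decoupling described above can be sketched with a toy in-memory model. This is not a Kafka client; `TopicLog` and the group names are hypothetical, and the class only mimics the idea that records are stored once while every target tracks its own read position.

```python
class TopicLog:
    """Toy stand-in for one Kafka partition: an append-only list of records."""

    def __init__(self):
        self._records = []   # stored records, in arrival order
        self._offsets = {}   # next offset to read, tracked per consumer group

    def produce(self, record):
        self._records.append(record)

    def poll(self, group, max_records=10):
        """Return the next batch for this group and advance only its own offset."""
        start = self._offsets.get(group, 0)
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch

    def seek_to_beginning(self, group):
        """Rewind one group so it can replay everything still stored."""
        self._offsets[group] = 0


log = TopicLog()
for i in range(5):
    log.produce(f"order-{i}")

print(log.poll("warehouse", max_records=2))  # a slow target reads at its own pace
print(log.poll("search-index"))              # a second target is unaffected by the first
log.produce("order-5")                       # the source keeps writing in the meantime
print(log.poll("warehouse"))                 # the slow target resumes where it stopped
log.seek_to_beginning("search-index")
print(log.poll("search-index"))              # replay everything to rebuild a target
```

In real Kafka the same roles are played by topic partitions, consumer group offsets, and the retention settings that decide how far back a replay can reach.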

Streaming pipelines are made up of at least two, and often three, components: Ingest, Egress, and optionally Processing.

Streaming ingest and egress between Kafka and an external system are usually performed using an Apache Kafka component called Kafka Connect. With Kafka Connect, you can create streaming integrations with numerous different technologies, including:

- Cloud data warehouses

- Relational databases

- Cloud object stores

- SaaS platforms such as Salesforce

- NoSQL stores like MongoDB

- Document stores like Elasticsearch and many more.

Architecture Diagram of Kafka Data Pipeline

Many connectors are available in Confluent Cloud; these are fully managed, and you only need to configure them with the necessary settings.

You can also run Kafka Connect yourself. It’s a fully distributed system, making it scalable and resilient. You might run your own Kafka Connect cluster if you also run your own Kafka brokers.

You can use the Confluent UI to configure Kafka Connect, and you can also use the Kafka Connect REST API to send its configuration in JSON.

Whichever way you configure Kafka Connect, and whether you use fully managed connectors or self-managed, no coding is required to integrate between Kafka and these other systems—it’s simply configuration!
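To make the "configuration, not code" point concrete, here is a minimal sketch of preparing a request for the Kafka Connect REST API, which accepts new connectors via `POST /connectors` with a JSON body containing `name` and `config`. The worker URL, the connector name, the file path, and the topic are placeholders assumed for illustration, and the FileStream source connector is used only as a simple stand-in that may not be installed on every worker.

```python
import json
import urllib.request


def build_create_request(connect_url, name, config):
    """Build (but do not send) a POST /connectors request for a Connect worker."""
    payload = json.dumps({"name": name, "config": config}).encode("utf-8")
    return urllib.request.Request(
        url=f"{connect_url.rstrip('/')}/connectors",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_create_request(
    "http://localhost:8083",          # assumed worker address
    "demo-file-source",               # hypothetical connector name
    {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
        "tasks.max": "1",
        "file": "/tmp/demo.txt",      # hypothetical input file
        "topic": "demo-topic",        # hypothetical target topic
    },
)
print(request.get_method(), request.full_url)

# To submit it against a running worker, uncomment:
# with urllib.request.urlopen(request) as response:
#     print(response.status, response.read().decode())
```

The request is only built here, so the sketch runs without a cluster; sending it is left commented out.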

Why it is better to use Kafka over GoldenGate

- Kafka is designed for horizontal scalability, enabling it to handle high-throughput data streams by distributing data across multiple brokers and partitions. It allows you to scale the system easily by adding brokers to the Kafka cluster. On the other hand, GoldenGate can be more challenging to scale across multiple nodes and may require additional configuration and setup.

- Kafka provides built-in fault tolerance through data replication. It replicates data across multiple brokers, ensuring high availability and durability. While GoldenGate also offers fault tolerance, achieving the same level as Kafka requires additional configuration and setup.

- Kafka has a rich ecosystem with extensive community support. It integrates well with various data processing systems such as Apache Spark, Flink, and Storm. Kafka also offers various connectors to integrate with different systems and data sources. On the other hand, GoldenGate, while having good integration with Oracle databases and some other systems, may have more limited ecosystem and connector options.

- Kafka is specifically designed for real-time streaming use cases, providing low-latency, high-throughput data ingestion and processing. Its pub-sub model allows multiple consumers to process data streams independently and in parallel. While GoldenGate is capable of real-time data integration, it may have more overhead and complexity in achieving the same level of real-time processing as Kafka.

- Additionally, Kafka is an open-source technology, which means it is free to use and has a large community of developers contributing to its development and improvement. In contrast, GoldenGate is a commercial product from Oracle, which may require licensing fees and other costs for support and maintenance.
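The horizontal scaling mentioned in the first point rests on spreading records across partitions by key. Below is a toy sketch of that idea. It is an assumption-laden stand-in: Kafka's Java producer hashes keys with murmur2, whereas this example uses CRC32 purely to stay within the standard library, and the function names and keys are hypothetical.

```python
import zlib
from collections import defaultdict


def partition_for(key, num_partitions):
    """Pick a partition by hashing the record key (CRC32 stands in for murmur2)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def spread(keys, num_partitions):
    """Group keys by the partition they would be written to."""
    by_partition = defaultdict(list)
    for key in keys:
        by_partition[partition_for(key, num_partitions)].append(key)
    return dict(by_partition)


keys = [f"customer-{i}" for i in range(12)]
for partition, members in sorted(spread(keys, 3).items()):
    print(partition, members)
```

Because the same key always maps to the same partition, records for one key stay in order, while different keys can be handled by different brokers and consumers in parallel.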

How Kafka Connect works differently from GoldenGate

Kafka Connect and Oracle GoldenGate are both data integration tools that facilitate data movement between systems, but they take different approaches and have different architectures. The points below compare how each of them works:

- Kafka Connect is a framework within Apache Kafka that simplifies building and running connectors. It follows a distributed, fault-tolerant, and scalable architecture. Connectors run as tasks inside Kafka Connect worker processes (JVMs), which can be started either in standalone mode or as part of a distributed Kafka Connect cluster.

- GoldenGate is a separate product that operates independently of Kafka. It consists of capture and apply processes known as Extract and Replicat. The Extract process captures changes from the source database, while the Replicat process applies those changes to the target database.

- Connectors in Kafka Connect are designed to integrate Kafka with other systems and data sources. Connectors are responsible for ingesting or egressing data from Kafka topics to external systems or vice versa. Kafka Connect provides a variety of pre-built connectors for popular systems, databases, and file formats, as well as the ability to develop custom connectors.

- GoldenGate focuses on data replication and integration across heterogeneous databases. It supports bidirectional data replication, allowing changes made in the source database to be applied to the target database and vice versa. GoldenGate provides powerful data transformation and filtering capabilities, enabling complex data mappings during replication.

- Connectors in Kafka Connect move data in the form of messages between Kafka topics and external systems. They handle the serialization and deserialization of data, maintaining compatibility with various data formats. Connectors can also perform basic message-level transformations such as filtering, routing, and enrichment.

- GoldenGate captures data changes at the transaction level from the source database logs. It replicates these changes as transactions to the target database, ensuring transactional consistency. GoldenGate also provides comprehensive data transformation capabilities, allowing for complex mappings and transformations.
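The message-level transformations mentioned for Kafka Connect are configured as Single Message Transforms (SMTs) inside the connector configuration. The sketch below shows what such a fragment might look like, using two transforms that ship with Apache Kafka (`RegexRouter` and `InsertField`). The aliases, topic pattern, and field values are hypothetical, and the small helper is just an illustrative sanity check, not part of Kafka Connect.

```python
# Hypothetical SMT settings that would be merged into a connector's config.
smt_config = {
    "transforms": "route,addSource",
    # Routing: rename topics such as "ORCL.ORDERS" to "oracle.ORDERS".
    "transforms.route.type": "org.apache.kafka.connect.transforms.RegexRouter",
    "transforms.route.regex": "ORCL\\.(.*)",
    "transforms.route.replacement": "oracle.$1",
    # Enrichment: add a static field to every record value.
    "transforms.addSource.type": "org.apache.kafka.connect.transforms.InsertField$Value",
    "transforms.addSource.static.field": "source_system",
    "transforms.addSource.static.value": "oracle",
}


def missing_transform_types(config):
    """Return aliases listed in 'transforms' that have no matching '.type' entry."""
    aliases = [a.strip() for a in config.get("transforms", "").split(",") if a.strip()]
    return [a for a in aliases if f"transforms.{a}.type" not in config]


print(missing_transform_types(smt_config))
```

SMTs operate on one record at a time, so heavier work such as joins or aggregations belongs in a stream processing layer rather than in the connector.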

Availability of Kafka connectors with a Confluent subscription

Confluent offers 120+ prebuilt connectors to assist you in integrating with Kafka reliably and efficiently. These connectors are available in three categories: Open Source / Community, Commercial, and Premium Connectors.

In addition to Confluent’s standard Commercial Connectors, they also provide Premium Connectors that are specifically designed to facilitate seamless and cost-effective integration of complex, high-value data systems, applications, and systems of record into Kafka.

To illustrate this further, let’s consider the example of connecting an Oracle database with Kafka.

Kafka Connect with Oracle Database

Before proceeding with this example, we must ensure that the following prerequisites are met.

- A running Kafka cluster

- An Oracle Database instance (version 18 or above)

- Confluent Platform (version 7.2 or above)

Once we have completed the prerequisites mentioned above, we need to download and install the Oracle CDC source connector in the Kafka Connect environment.

Next, we have to edit the worker configuration file: connect-standalone.properties if we are running Kafka Connect in standalone mode, or connect-distributed.properties on every worker if we are running it in distributed mode.

In this configuration file, we have to add the location of the Oracle CDC Source connector to the plugin.path property.
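Once the plugin path is set and the worker has been restarted, one way to confirm that the connector was picked up is to ask the worker which plugins it has loaded. The Kafka Connect REST API exposes this as `GET /connector-plugins`. The sketch below checks a response of that shape for the Oracle CDC source class; the class name is given as I understand it from Confluent's documentation, and the sample response (including version strings) is made up for illustration.

```python
import json

# Assumed class name of the Confluent Oracle CDC Source connector.
ORACLE_CDC_CLASS = "io.confluent.connect.oracle.cdc.OracleCdcSourceConnector"


def plugin_installed(plugins, connector_class):
    """Check whether a GET /connector-plugins response lists the given class."""
    return any(plugin.get("class") == connector_class for plugin in plugins)


# Made-up sample of what a worker might return; real output will differ.
sample_response = json.loads("""
[
  {"class": "io.confluent.connect.oracle.cdc.OracleCdcSourceConnector",
   "type": "source", "version": "0.0.0-sample"},
  {"class": "org.apache.kafka.connect.mirror.MirrorSourceConnector",
   "type": "source", "version": "0.0.0-sample"}
]
""")

print(plugin_installed(sample_response, ORACLE_CDC_CLASS))
```

If the class is missing from the real response, the usual suspects are a wrong directory in the plugin path or a worker that has not been restarted since the change.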

Once we are done with this, we have to grant the necessary privileges to the Oracle user that the connector will use to access the Oracle database and the defined tables. We also have to enable supplemental logging, which allows the connector to capture the changes.

Once we have completed all of these prerequisites, we can configure the connector directly in Confluent Control Center by adding it there.
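As a rough sketch of this database preparation, the helper below generates the kind of SQL statements a DBA might run. The user and table names are hypothetical, and the grants shown are only an illustrative subset: the full privilege list depends on the Oracle version and on whether the database is multitenant, so the connector documentation should be treated as the authority.

```python
def supplemental_logging_statements(tables):
    """SQL to enable minimal supplemental logging, plus all-column logging per table."""
    statements = ["ALTER DATABASE ADD SUPPLEMENTAL LOG DATA"]
    statements += [
        f"ALTER TABLE {table} ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS"
        for table in tables
    ]
    return statements


def grant_statements(user, tables):
    """Illustrative subset of grants for the connector user (not a complete list)."""
    grants = [
        f"GRANT CREATE SESSION TO {user}",
        f"GRANT LOGMINING TO {user}",
    ]
    grants += [f"GRANT SELECT ON {table} TO {user}" for table in tables]
    return grants


tables = ["SALES.ORDERS", "SALES.CUSTOMERS"]   # hypothetical captured tables
for sql in supplemental_logging_statements(tables) + grant_statements("KAFKA_CONNECT", tables):
    print(sql + ";")
```

Generating the statements from one table list keeps the logging setup and the grants in sync with the tables the connector is meant to capture.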

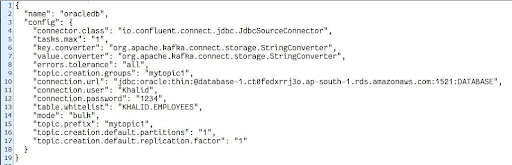

Or, we can prepare the configuration file and upload it to Control Center.

Alternatively, we can create a new file containing the connector configuration in JSON and save it on the Connect worker.

The important configuration parameters to define when connecting Oracle to Kafka are the DNS name of the Oracle host, the Oracle port number, the name of the Oracle database, the Oracle username, the Oracle password, and bootstrap.servers.
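A configuration file covering these parameters might be generated as in the sketch below. The property names are the ones I understand the Confluent Oracle CDC Source connector to use and should be verified against its documentation. Mapping "bootstrap.servers" to `redo.log.consumer.bootstrap.servers` and `confluent.topic.bootstrap.servers` is my assumption, and every value (host, SID, credentials, table pattern, connector name) is a placeholder.

```python
import json


def oracle_cdc_config(host, port, database, username, password,
                      bootstrap_servers, table_regex):
    """Build a connector definition in the shape the Connect REST API expects."""
    return {
        "name": "oracle-cdc-source",  # hypothetical connector name
        "config": {
            "connector.class": "io.confluent.connect.oracle.cdc.OracleCdcSourceConnector",
            "tasks.max": "1",
            "oracle.server": host,            # DNS name of the Oracle host
            "oracle.port": str(port),
            "oracle.sid": database,
            "oracle.username": username,
            "oracle.password": password,
            "table.inclusion.regex": table_regex,
            # Assumed mapping of "bootstrap.servers" onto connector properties:
            "redo.log.consumer.bootstrap.servers": bootstrap_servers,
            "confluent.topic.bootstrap.servers": bootstrap_servers,
        },
    }


definition = oracle_cdc_config(
    host="oracle.example.internal",   # placeholder values throughout
    port=1521,
    database="ORCLCDB",
    username="KAFKA_CONNECT",
    password="change-me",
    bootstrap_servers="broker1:9092",
    table_regex="ORCLCDB[.]SALES[.]ORDERS",
)
print(json.dumps(definition, indent=2))
```

The printed JSON can be saved as test.json on the Connect worker. In practice the password would normally come from a secret store or a config provider rather than being written into the file.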

After creating the configuration file, we can upload it using the following command:

curl -X POST -H "Content-Type: application/json" --data-binary "@test.json" "http://:8083/connectors"

After completing these steps, the connector establishes the connection between Oracle and the Kafka cluster. If the connector encounters an error or fails, we can troubleshoot the issue by checking its status through the Kafka Connect REST API:

:8083/connectors//status
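The status endpoint returns JSON with the state of the connector and of each task, and a failed task carries its stack trace in a `trace` field. The following sketch summarizes such a response; the function name is hypothetical and the sample payload, including the error text, is fabricated purely to exercise the code.

```python
def summarize_status(status):
    """List problems found in a GET /connectors/<name>/status response."""
    problems = []
    connector_state = status.get("connector", {}).get("state")
    if connector_state != "RUNNING":
        problems.append(f"connector is {connector_state}")
    for task in status.get("tasks", []):
        if task.get("state") == "FAILED":
            trace = task.get("trace", "")
            # The first line of the trace usually names the exception.
            reason = trace.splitlines()[0] if trace else "no trace reported"
            problems.append(f"task {task.get('id')} FAILED: {reason}")
    return problems


# Fabricated sample response for illustration only.
sample_status = {
    "name": "oracle-cdc-source",
    "connector": {"state": "RUNNING", "worker_id": "10.0.0.1:8083"},
    "tasks": [
        {
            "id": 0,
            "state": "FAILED",
            "worker_id": "10.0.0.1:8083",
            "trace": "ConnectException: example failure\n\tat Example.run(Example.java:1)",
        }
    ],
    "type": "source",
}

for problem in summarize_status(sample_status):
    print(problem)
```

An empty list means the connector and all of its tasks report a healthy state; anything else points at where to look in the worker logs.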

Final Thoughts

In summary, properly deployed Kafka-based solutions can vastly improve how streaming data pipelines are built and how they perform, enabling teams to get reliable, real-time insights from their data.

Ashnik is a trusted provider of open-source technology solutions, including guidance on deployment of Kafka for your data pipeline requirements. Our aim is to provide our clients with reliable, stable, and more efficient support that is aligned with the industry’s needs.

Get in touch today for a free consultation with our team of experts! Alternatively, you can reach out to success@ashnik.com to connect with our team and unlock the full potential of Kafka for your organization.