Apache Spark is a robust distributed computing system that enhances parallel data processing by segmenting datasets into smaller units known as partitions. These partitions are pivotal to Spark’s efficiency, enabling operations to be distributed across multiple cores and executors. This article delves into the nature of Spark partitions, their operational mechanics, and their influence on performance.

What Are Partitions in Spark?

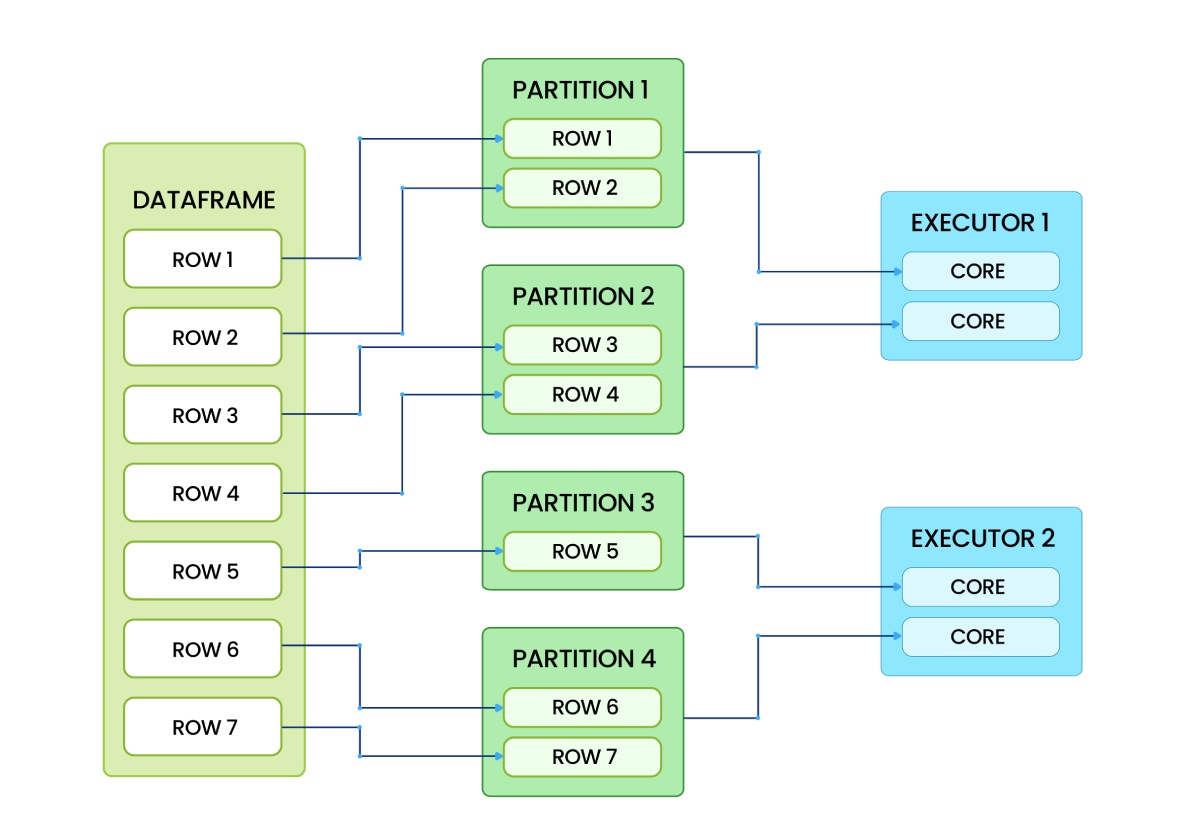

In Spark, a partition represents a subset of a dataset. Instead of processing an entire dataset as a single entity, Spark divides it into multiple partitions, facilitating parallel processing across a cluster’s nodes. This approach allows Spark to effectively utilize distributed computing resources.

Key Characteristics of Partitions:

- Subsets of DataFrames or RDDs: Each partition contains a portion of the dataset.

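- Independent Processing: Narrow transformations, such as filters and column projections, are applied to each partition independently; operations like joins and aggregations may need to exchange data between partitions (a shuffle).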

- Parallelism: The number of partitions caps the level of parallelism in Spark. A stage runs one task per partition, so it can keep at most as many cores busy as it has partitions.

- Resource Utilization: An optimal number of partitions ensures efficient use of computing resources.
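The characteristics above can be modeled in a few lines of plain Python. This is a toy sketch, not Spark's API: the helper names `split_into_partitions` and `map_partitions` are hypothetical, and the even, contiguous split is an assumption (Spark's actual partition boundaries depend on the data source, file sizes, and configuration).

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def split_into_partitions(rows: List[T], num_partitions: int) -> List[List[T]]:
    """Split rows into num_partitions contiguous chunks of near-equal size."""
    if num_partitions < 1:
        raise ValueError("num_partitions must be at least 1")
    base, extra = divmod(len(rows), num_partitions)
    partitions, start = [], 0
    for i in range(num_partitions):
        # The first `extra` partitions each receive one additional row.
        size = base + (1 if i < extra else 0)
        partitions.append(rows[start:start + size])
        start += size
    return partitions


def map_partitions(partitions: List[List[T]], fn: Callable[[T], U]) -> List[List[U]]:
    """Apply fn to every row, one partition at a time, never reading other partitions."""
    return [[fn(row) for row in part] for part in partitions]


if __name__ == "__main__":
    parts = split_into_partitions(list(range(10)), 4)
    print(parts)  # [[0, 1, 2], [3, 4, 5], [6, 7], [8, 9]]
    print(map_partitions(parts, lambda x: x * 10))
```

Because `map_partitions` only ever looks at one partition at a time, each inner list could be handed to a different worker, which is the property Spark relies on for parallelism.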

How Spark Executes Tasks on Partitions

When an action triggers a job, Spark divides the job into stages and runs one task per partition in each stage. These tasks are distributed across the available cores of the Spark executors.

Execution Process:

- Dataset Division: A DataFrame or RDD is split into multiple partitions.

- Task Assignment: Each partition is processed by one task, which the scheduler assigns to a free executor core.

- Parallel Processing: Tasks run concurrently across available cores, enhancing efficiency.

- Result Compilation: Once all tasks complete, Spark combines their results, for example by returning them to the driver or writing them to storage.

For instance, consider a DataFrame divided into four partitions, each containing a subset of rows. These partitions are allocated to executors, with each executor’s cores processing tasks independently and in parallel.
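The four-partition example can be mimicked with a thread pool in which each worker stands in for an executor core. This is a simplified model under stated assumptions: `run_stage` and `num_waves` are hypothetical helpers, the threads only model scheduling (they give no real CPU speedup in Python), and every task is assumed to take the same time.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List


def run_stage(partitions: List[List[int]], num_cores: int) -> List[int]:
    """Run one task per partition on a pool of num_cores workers, then collect results."""

    def task(part: List[int]) -> int:
        # Each task sees only its own partition; here it computes a sum of squares.
        return sum(x * x for x in part)

    with ThreadPoolExecutor(max_workers=num_cores) as pool:
        # pool.map returns results in input order, so they line up with partition indices.
        return list(pool.map(task, partitions))


def num_waves(num_partitions: int, num_cores: int) -> int:
    """Rounds of tasks needed when each core runs one task at a time."""
    return math.ceil(num_partitions / num_cores)


if __name__ == "__main__":
    partitions = [[1, 2], [3, 4], [5, 6], [7, 8]]  # four partitions
    per_partition = run_stage(partitions, num_cores=2)
    print(per_partition)       # [5, 25, 61, 113]
    print(sum(per_partition))  # 204, the combined result
    print(num_waves(4, 2))     # 2
```

With four partitions and two cores, the tasks run in two waves; with eight cores, four cores would sit idle, which is why the partition count bounds parallelism.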

Determining the Ideal Number of Partitions

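A common rule of thumb for setting the number of partitions is:

$$\text{Number of partitions} = \frac{\text{Dataset size (MB)}}{128\ \text{MB}}$$

This formula aims for partitions of roughly 128 MB each, balancing parallelism and resource utilization. 128 MB is also the default value of `spark.sql.files.maxPartitionBytes`, the maximum number of bytes Spark packs into a single partition when reading files.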
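A minimal sketch of the rule of thumb, assuming sizes are given in MB. Rounding up and enforcing at least one partition are assumptions added here rather than part of the formula itself, and `estimate_partitions` is a hypothetical helper name.

```python
import math


def estimate_partitions(dataset_size_mb: float, target_partition_mb: float = 128.0) -> int:
    """Dataset size divided by a ~128 MB target, rounded up, with a minimum of 1."""
    if dataset_size_mb < 0 or target_partition_mb <= 0:
        raise ValueError("size must be non-negative and the target must be positive")
    return max(1, math.ceil(dataset_size_mb / target_partition_mb))


if __name__ == "__main__":
    print(estimate_partitions(10 * 1024))  # 10 GB -> 80
    print(estimate_partitions(1000))       # 7.8125 rounds up -> 8
    print(estimate_partitions(50))         # small data still gets 1 partition
```

For example, a 10 GB (10,240 MB) dataset gives 10,240 / 128 = 80 partitions.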

Importance of Partitions

Partitions significantly influence the performance of Spark jobs due to several factors:

- Parallel Execution: With enough partitions, Spark can spread work across all available executors and cores; too few partitions leave some cores idle.

- Fault Tolerance: If a node fails, Spark uses each partition's lineage (the chain of transformations that produced it) to recompute only the lost partitions rather than the entire dataset.

- Optimized Resource Utilization: Appropriate partitioning prevents bottlenecks and enhances cluster performance.

- Reduced Shuffling: Partitioning data by the keys used in later joins or aggregations minimizes data movement between nodes, leading to faster execution.
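One way partitioning reduces shuffling is co-partitioning: if two datasets are hash-partitioned by the same key into the same number of partitions, matching keys end up at the same partition index, so each pair of partitions can be joined locally. The sketch below is a plain-Python toy model, not Spark's implementation; `zlib.crc32` is a stable stand-in for Spark's own hash function, and the helper names are hypothetical. In Spark the initial partitioning is itself a shuffle; the saving comes when later operations reuse it.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Tuple[str, int]


def hash_partition(rows: List[Row], num_partitions: int) -> List[List[Row]]:
    """Place each (key, value) row in partition hash(key) % num_partitions."""
    parts: List[List[Row]] = [[] for _ in range(num_partitions)]
    for key, value in rows:
        # crc32 is deterministic across runs, unlike Python's salted str hash.
        parts[zlib.crc32(key.encode("utf-8")) % num_partitions].append((key, value))
    return parts


def local_join(left: List[Row], right: List[Row]) -> List[Tuple[str, int, int]]:
    """Join two partitions on key without consulting any other partition."""
    index: Dict[str, List[int]] = defaultdict(list)
    for key, value in right:
        index[key].append(value)
    return [(key, lv, rv) for key, lv in left for rv in index.get(key, [])]


def copartitioned_join(left: List[Row], right: List[Row],
                       num_partitions: int) -> List[Tuple[str, int, int]]:
    """Partition both sides identically, then join partition i with partition i only."""
    left_parts = hash_partition(left, num_partitions)
    right_parts = hash_partition(right, num_partitions)
    result: List[Tuple[str, int, int]] = []
    for lp, rp in zip(left_parts, right_parts):
        result.extend(local_join(lp, rp))
    return sorted(result)


if __name__ == "__main__":
    orders = [("alice", 10), ("bob", 20), ("alice", 30), ("carol", 40)]
    users = [("alice", 1), ("bob", 2), ("dave", 4)]
    print(copartitioned_join(orders, users, num_partitions=3))
    # [('alice', 10, 1), ('alice', 30, 1), ('bob', 20, 2)]
```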

Managing Partitions in Spark

- Checking the Number of Partitions:

```python
from pyspark.sql import SparkSession

# Create (or reuse) a Spark session
spark = SparkSession.builder.appName("PartitionExample").getOrCreate()

# Read a CSV file into a DataFrame
df = spark.read.csv("data.csv", header=True, inferSchema=True)

# A DataFrame exposes its partition count through its underlying RDD
print("Number of partitions:", df.rdd.getNumPartitions())
```

- Adjusting the Number of Partitions:

  - Increasing Partitions:

```python
df_repartitioned = df.repartition(6)  # Redistributes the rows into 6 partitions
```

Note: repartition() performs a full shuffle, redistributing data across the specified number of partitions. It can also reduce the partition count, but the full shuffle makes it more expensive than coalesce() for that purpose.

  - Decreasing Partitions:

```python
df_coalesced = df.coalesce(3)  # Merges existing partitions into at most 3
```

Note: coalesce() reduces the number of partitions without a full shuffle, making it more efficient for decreasing partitions. It cannot increase the partition count: requesting more partitions than currently exist leaves the count unchanged.
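The difference between the two methods can be illustrated with a plain-Python toy model. This is a simplified sketch, not how Spark implements either operation: `toy_repartition` deals every row round-robin into new partitions (every row may move), while `toy_coalesce` moves whole existing partitions into neighbouring groups (no row is split from its partition). Both names are hypothetical, and Spark's real coalesce also takes data locality into account.

```python
from typing import List, TypeVar

T = TypeVar("T")


def toy_repartition(partitions: List[List[T]], n: int) -> List[List[T]]:
    """Full shuffle: flatten all rows and deal them round-robin into n partitions."""
    if n < 1:
        raise ValueError("n must be at least 1")
    out: List[List[T]] = [[] for _ in range(n)]
    rows = [row for part in partitions for row in part]
    for i, row in enumerate(rows):
        out[i % n].append(row)
    return out


def toy_coalesce(partitions: List[List[T]], n: int) -> List[List[T]]:
    """No shuffle: merge neighbouring partitions into n groups; never increases the count."""
    if n < 1:
        raise ValueError("n must be at least 1")
    n = min(n, len(partitions))
    groups: List[List[T]] = [[] for _ in range(n)]
    for i, part in enumerate(partitions):
        # Partition i moves as a whole block into group i * n // len(partitions).
        groups[i * n // len(partitions)].extend(part)
    return groups


if __name__ == "__main__":
    parts = [[1, 2], [3], [4, 5, 6], [7], [8, 9], [10]]  # six partitions
    print(toy_coalesce(parts, 3))     # [[1, 2, 3], [4, 5, 6, 7], [8, 9, 10]]
    print(toy_repartition(parts, 4))  # [[1, 5, 9], [2, 6, 10], [3, 7], [4, 8]]
    print(len(toy_coalesce(parts, 10)))  # 6: coalesce cannot add partitions
```

The coalesced groups can be uneven because whole partitions are merged as-is, whereas the round-robin shuffle produces balanced partitions at the cost of moving every row.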


Best Practices for Spark Partitions

- Balance Partitions and Executors: Align the number of partitions with the available cores; Spark's tuning guide recommends roughly 2 to 3 tasks per CPU core in the cluster. Keep each partition small enough to avoid memory pressure on the executors.

- Avoid Excessive Small Partitions: Too many small partitions can lead to overhead, increasing task scheduling time and reducing performance.

- Minimize Data Shuffling: Optimize partitioning strategies to reduce expensive network transfers between nodes.

- Address Data Skew: For skewed data distributions, employ techniques like salting or skew join optimization to balance the load across partitions.
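Salting can be sketched in plain Python: a hot key is rewritten into several salted variants (`key#0`, `key#1`, ...) so that its rows hash to more than one partition. This is a toy model under stated assumptions: `zlib.crc32` stands in for Spark's hash function, the helper names are hypothetical, and in a real join the other table's rows for the hot key would also need to be replicated once per salt value, which this sketch omits.

```python
import zlib
from collections import Counter
from typing import List, Set


def partition_of(key: str, num_partitions: int) -> int:
    """Stable stand-in for a hash partitioner: crc32(key) % num_partitions."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def partition_sizes(keys: List[str], num_partitions: int) -> List[int]:
    """Count how many rows land in each partition."""
    counts = Counter(partition_of(k, num_partitions) for k in keys)
    return [counts.get(i, 0) for i in range(num_partitions)]


def salt_keys(keys: List[str], hot_keys: Set[str], num_salts: int) -> List[str]:
    """Rewrite hot keys as key#0 ... key#(num_salts - 1), rotating through the salts."""
    salted, hot_seen = [], 0
    for key in keys:
        if key in hot_keys:
            salted.append(f"{key}#{hot_seen % num_salts}")
            hot_seen += 1
        else:
            salted.append(key)  # non-skewed keys are left unchanged
    return salted


if __name__ == "__main__":
    # Skewed data: 900 of 1,000 rows share the key "hot".
    keys = ["hot"] * 900 + [f"k{i}" for i in range(100)]
    before = partition_sizes(keys, 4)
    after = partition_sizes(salt_keys(keys, {"hot"}, num_salts=8), 4)
    print("largest partition before salting:", max(before))
    print("largest partition after salting:", max(after))
```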

Conclusion

A thorough understanding of Spark partitions is essential for optimizing performance in distributed computing. By effectively managing partitions, you can enhance the efficiency and speed of Spark jobs while minimizing resource wastage. Experimenting with different partitioning strategies can provide valuable insights into their impact on execution performance.