For years, observability ingestion has been dominated by vendor-specific agents, collectors, and tightly coupled pipelines. Each monitoring platform came with its own agent, its own way of collecting data, and its own opinionated ingestion path. This worked in isolated setups, but it created long-term problems: vendor lock-in, operational rigidity, and limited freedom of choice.

OpenTelemetry (OTEL) Collectors are quietly but fundamentally changing this model.

This article explores how OTEL Collectors function as the new ingestion control plane, emphasizing the decoupling of collection, storage, and analytics, and explaining the critical architectural difference between the OTEL SDK, Collector Agent, and Collector Gateway.

Why Observability Needed a Reset

For a long time, observability in production followed a very linear and vendor-driven model:

- Select a monitoring vendor (e.g., Datadog, New Relic, Elastic APM).

- Install that vendor’s agent inside or alongside applications.

- Ship all telemetry directly to the vendor backend.

This approach worked reasonably well when applications were monolithic, infrastructure was static, and the technology stacks were uniform.

However, modern production systems evolved dramatically:

- Polyglot Stacks Became Normal: An application now routinely includes Python, Java, Node.js, and Go components, each needing robust instrumentation.

- Microservices Exploded: Teams manage dozens or hundreds of ephemeral services.

- Cloud-Native Infrastructure: Containers, autoscaling, and short-lived workloads removed the assumption of “long-running hosts.”

- Cost and Governance Priority: Observability data volumes and retention costs became a top-tier business concern.

At this inflection point, a fundamental problem became visible: Instrumentation, collection, storage, and analytics were tightly coupled, and that coupling became the bottleneck. Every change, whether a vendor migration or a data cost optimization, required coordinating with the vendor’s proprietary agent, pricing model, and ingestion limits. OpenTelemetry emerged not to replace observability tools, but to decouple these layers, enabling systems to scale without locking architectural decisions too early.

OpenTelemetry Architecture — The Missing Mental Model

One of the biggest challenges with OpenTelemetry adoption is trying to map it directly onto the existing “agent + backend” thinking. That mapping is incorrect.

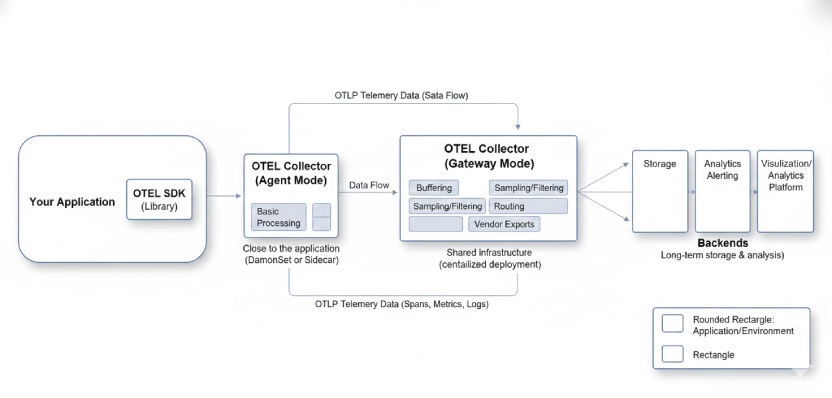

OpenTelemetry introduces a layered architecture where each component has a specific, isolated responsibility.

| Component | Where It Runs | Core Responsibility |
|---|---|---|
| OTEL SDK | Inside the application (library) | Produces telemetry data such as spans, metrics, and logs in OTLP format |
| OTEL Collector (Agent Mode) | Close to the application (DaemonSet or Sidecar) | Provides local reliability through buffering, basic processing, and transport to the Gateway |
| OTEL Collector (Gateway Mode) | Shared infrastructure (centralized deployment) | Enforces global policies, performs advanced processing like sampling and filtering, handles routing, and manages vendor exports |
| Backends | External storage and analytics systems | Handles long-term storage, visualization, alerting, and analytical workloads |

OTEL SDK: What Really Happens Inside the Application

The OpenTelemetry SDK is often misunderstood as “just another agent.” In practice, its role is strictly limited to observation.

What the OTEL SDK Actually Does:

Inside an application (e.g., Python, Java, Go, Node.js), the SDK:

- Hooks into frameworks (HTTP servers, database drivers, web frameworks like Django/Spring).

- Automatically creates spans for incoming requests, outgoing calls, and database queries.

- Maintains trace context propagation across services (the foundation of distributed tracing).

- Buffers telemetry in memory.

- Exports telemetry via OTLP (OpenTelemetry Protocol, over gRPC or HTTP) to the Collector.
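Of the responsibilities above, context propagation is the easiest to make concrete. The following is a minimal standard-library sketch of the W3C `traceparent` header format, not the SDK's own propagator code. The helper names `parse_traceparent` and `child_traceparent` are hypothetical, and starting a new sampled trace when no valid header arrives is an assumption of this sketch.

```python
import re
import secrets

# W3C Trace Context, version 00: version-traceid-parentid-flags, lowercase hex.
_TRACEPARENT = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def parse_traceparent(header):
    """Return (trace_id, parent_span_id, sampled), or None if the header is invalid."""
    match = _TRACEPARENT.match(header.strip())
    if match is None:
        return None
    trace_id, span_id, flags = match.groups()
    # All-zero IDs are invalid according to the specification.
    if set(trace_id) == {"0"} or set(span_id) == {"0"}:
        return None
    sampled = bool(int(flags, 16) & 0x01)
    return trace_id, span_id, sampled


def child_traceparent(header):
    """Build the header for an outgoing call: same trace ID, fresh span ID."""
    parsed = parse_traceparent(header)
    if parsed is None:
        # Assumption of this sketch: no valid context means a new, sampled trace.
        trace_id, sampled = secrets.token_hex(16), True
    else:
        trace_id, _, sampled = parsed
    span_id = secrets.token_hex(8)
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"
```

Because every hop keeps the trace ID and replaces only the span ID, a backend can later stitch spans from different services into one trace.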

What the SDK Explicitly Does Not Do:

This is where the architectural boundary becomes clear:

- The SDK does not know where data will be stored (Elasticsearch, Prometheus, etc.).

- The SDK does not provide durable retries, advanced batching, or long-term storage; whatever it buffers lives only in process memory.

- The SDK does not apply routing or vendor-specific logic.

- The SDK should not communicate directly with a vendor backend in a production environment.

The SDK’s role is to observe the application, not to make infrastructure decisions. It merely hands off the data to the Collector.
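One way to picture this hand-off is to look at how little export configuration the application carries. The sketch below reads the standard `OTEL_SERVICE_NAME`, `OTEL_EXPORTER_OTLP_PROTOCOL`, and `OTEL_EXPORTER_OTLP_ENDPOINT` environment variables. The function name `resolve_export_target` is hypothetical, the `grpc` fallback and the `unknown_service` placeholder are assumptions of this sketch, and only two OTLP transports are handled.

```python
import os

# Conventional local Collector ports for each OTLP transport.
DEFAULT_ENDPOINTS = {
    "grpc": "http://localhost:4317",
    "http/protobuf": "http://localhost:4318",
}


def resolve_export_target(env=None):
    """Return the only export settings the application needs to know."""
    env = os.environ if env is None else env
    # Assumption: fall back to gRPC when no protocol is configured.
    protocol = env.get("OTEL_EXPORTER_OTLP_PROTOCOL", "grpc")
    if protocol not in DEFAULT_ENDPOINTS:
        raise ValueError(f"unsupported OTLP protocol in this sketch: {protocol}")
    return {
        "service_name": env.get("OTEL_SERVICE_NAME", "unknown_service"),
        "protocol": protocol,
        "endpoint": env.get("OTEL_EXPORTER_OTLP_ENDPOINT", DEFAULT_ENDPOINTS[protocol]),
    }


# Example: the application points at a local Agent and nothing else.
target = resolve_export_target({"OTEL_SERVICE_NAME": "django-app"})
```

Nothing in this configuration names Elasticsearch, Prometheus, or any vendor. Changing the backend means changing the Collector, not the application.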

OTEL Collector: The Ingestion Control Plane

The Collector is the single most critical component for decoupling. It is a vendor-neutral, standalone telemetry pipeline that sits between your applications and your observability backends.

The power of the Collector comes from its three pipeline component types: Receivers, Processors, and Exporters.

- Receivers: Define how data enters the collector (e.g., OTLP from the SDK, Jaeger, Prometheus scrape, Fluent Forward).

- Processors: Define what happens to the data in-flight. This is where the control is centralized: filtering, sampling traces, masking sensitive data, enriching data with common metadata, and batching.

- Exporters: Define where the data goes (e.g., Elasticsearch, Prometheus, Datadog, S3, Kafka).
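The receiver, processor, exporter flow can be modeled in a few lines. The real Collector is a separate binary configured declaratively, so the Python below is only a conceptual sketch: the `Span` and `Pipeline` classes, the processor functions, and the attribute keys treated as sensitive are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    name: str
    attributes: dict = field(default_factory=dict)


def drop_health_checks(spans):
    """Processor: filter out noisy health-check spans."""
    return [s for s in spans if s.attributes.get("http.target") != "/healthz"]


def mask_sensitive(spans):
    """Processor: redact values for keys treated as sensitive in this sketch."""
    sensitive = {"user.email", "enduser.id"}
    for span in spans:
        for key in span.attributes.keys() & sensitive:
            span.attributes[key] = "***"
    return spans


def add_environment(spans):
    """Processor: enrich every span with shared metadata."""
    for span in spans:
        span.attributes.setdefault("deployment.environment", "production")
    return spans


class Pipeline:
    """A receiver calls `receive`; processors run in order; every exporter gets the result."""

    def __init__(self, processors, exporters):
        self.processors = processors
        self.exporters = exporters

    def receive(self, spans):
        for process in self.processors:
            spans = process(spans)
        for export in self.exporters:
            export(spans)
        return spans


# Two in-memory lists stand in for two different backends.
elastic, archive = [], []
pipeline = Pipeline(
    processors=[drop_health_checks, mask_sensitive, add_environment],
    exporters=[elastic.extend, archive.extend],
)
pipeline.receive([
    Span("GET /orders", {"http.target": "/orders", "user.email": "a@example.com"}),
    Span("GET /healthz", {"http.target": "/healthz"}),
])
```

Adding a second backend is one more entry in `exporters`; the code that produced the spans is untouched, which is the decoupling the Collector is meant to provide.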

Agent vs. Gateway: A Key Distinction

To achieve scalability and resilience, the Collector is deployed in two different modes:

| Mode | Role | Deployment Model | Benefit |
|---|---|---|---|
| Agent | Local reliability | Sidecar (per service) or DaemonSet (per host) | Minimizes network hops, provides immediate buffering, and offloads processing from the application |
| Gateway | Central governance | Dedicated deployment such as a Kubernetes Deployment | Centralizes policies like sampling, cost management, and routing, and handles vendor exports and retries |

This separation of concerns—proximity (Agent) versus policy (Gateway)—is vital for operating a resilient, distributed observability platform.
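The Agent's "immediate buffering" role can be illustrated with a small batching forwarder that flushes either when a batch fills up or when it has waited too long. This is a sketch under stated assumptions, not the Collector's batch processor: the class name `BatchingForwarder`, its parameters, and the default values are hypothetical.

```python
import time


class BatchingForwarder:
    """Agent-side buffer: flush when the batch is full or has waited too long."""

    def __init__(self, send, max_batch_size=512, max_wait_seconds=5.0, clock=time.monotonic):
        self._send = send                  # callable that ships a list to the Gateway
        self._max_batch_size = max_batch_size
        self._max_wait = max_wait_seconds
        self._clock = clock                # injectable so the timing is testable
        self._items = []
        self._first_item_at = None

    def add(self, item):
        if not self._items:
            self._first_item_at = self._clock()
        self._items.append(item)
        if len(self._items) >= self._max_batch_size:
            self.flush()

    def tick(self):
        """Call periodically; flushes a partial batch once it is old enough."""
        if self._items and self._clock() - self._first_item_at >= self._max_wait:
            self.flush()

    def flush(self):
        if self._items:
            batch, self._items = self._items, []
            self._send(batch)


# Usage with a fake clock: one size-based flush, then one age-based flush.
sent = []
now = [0.0]
agent = BatchingForwarder(sent.append, max_batch_size=3, max_wait_seconds=5.0,
                          clock=lambda: now[0])
for i in range(4):
    agent.add(f"span-{i}")   # the third add triggers a size-based flush
now[0] = 6.0
agent.tick()                 # the remaining span is flushed by age
```

The application never sees any of this. It hands spans to the local Agent and returns to serving requests, while the Agent decides when a batch is worth a network round trip to the Gateway.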

Production Use Case: End-to-End Tracing with Decoupled Components

Let’s move away from theory.

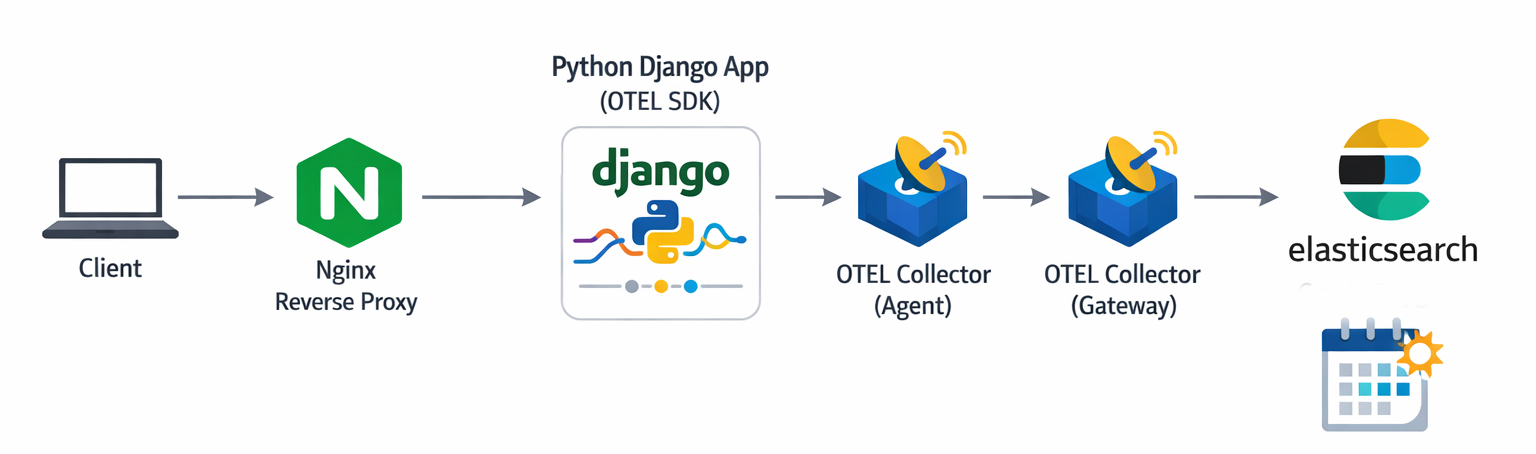

Scenario

- Python Django application

- Nginx as reverse proxy / load balancer

- PostgreSQL as primary database

Requirement: End-to-end request tracing, database visibility, no vendor lock-in, and the future ability to send data to multiple tools.

Architecture (Production Reality)

Step-by-step: What happens at runtime

- Request enters Nginx: Trace headers (traceparent) are forwarded.

- Django receives the request: The OTEL SDK creates a server span for the request, continuing the incoming trace context when one is present (otherwise this span becomes the root).

- Database query is executed: psycopg2 instrumentation creates DB spans, capturing query latency.

- Telemetry is buffered locally: The application is agnostic of the backend. It only sends OTLP data to the local Agent.

- Agent Collector receives OTLP: Batches spans and applies basic attributes (host, service, env).

- Gateway Collector processes data: Applies the central sampling policy and routes traces to Elastic.

- Backend visualizes service map: The App → Database dependency is visible, and latency breakdown is preserved.

At no point is the application aware of the backend type, storage format, or analytics tool. This decoupling of collection, storage, and analytics is the biggest benefit of the OTEL Collector architecture.
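Because sampling policy lives in the Gateway, it can change without touching the application. As an illustration, here is one possible policy: keep every trace that contains an error or a slow span, and keep a deterministic fraction of the rest based on the trace ID. The function name `keep_trace`, the span dictionary shape, and both thresholds are assumptions of this sketch rather than Collector defaults.

```python
def keep_trace(trace_id, spans, baseline_ratio=0.10, slow_ms=500):
    """Decide whether a completed trace is kept. Thresholds are illustrative."""
    # Always keep traces that contain an error.
    if any(s.get("error") for s in spans):
        return True
    # Always keep traces that contain a slow span.
    if max((s["duration_ms"] for s in spans), default=0) >= slow_ms:
        return True
    # Deterministic baseline: the same trace ID always maps to the same bucket,
    # so repeated evaluations of one trace give the same answer.
    bucket = int(trace_id[-8:], 16) / 0x1_0000_0000
    return bucket < baseline_ratio


healthy = [{"duration_ms": 20}, {"duration_ms": 5}]
decisions = {
    "error": keep_trace("4bf92f3577b34da6a3ce929d0e0e4736",
                        [{"duration_ms": 20, "error": True}]),
    "slow": keep_trace("4bf92f3577b34da6a3ce929d0e0e4736", [{"duration_ms": 900}]),
    "low_bucket": keep_trace("0" * 24 + "00000001", healthy),
    "high_bucket": keep_trace("0" * 24 + "ffffffff", healthy),
}
```

A policy like this needs all spans of a trace before it can decide, which is one reason it belongs in a centralized Gateway rather than in each application or Agent.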

Final Thoughts

This article intentionally focuses on architectural intent rather than deployment mechanics. OpenTelemetry Collectors mark a shift in how observability ingestion is designed, separating application instrumentation from backend and vendor decisions. In our work with enterprises at Ashnik, this decoupled model has become essential for building scalable, governable observability platforms that can evolve with modern production systems without repeated reinstrumentation or lock-in.