
In my previous article, I talked about ELK cluster sizing and walked through the factors to consider when setting up an ELK cluster. Today, I’m going to discuss how to improve Elasticsearch performance, especially when you are already in production (or planning to go live soon). If you’re not getting the desired performance with the default Elasticsearch settings, here are some things you could look into:

1: Memory Allocation

We want fast and relevant search results, so memory and disk I/O play a very important role here.

It is advisable to have 64 GB of RAM with SSD storage. You can get by with less memory and fast HDDs, depending on the workload, if you trade that off against more data nodes.

Elasticsearch runs as a Java process, so it is very important to give each data node the right amount of JVM heap. It is advisable to allocate a little less than 50% of RAM to the JVM heap. Example: if you have 64 GB of RAM on an Elasticsearch data node, set the heap to ~30 GB and not more than 32 GB. Above roughly 32 GB the JVM can no longer use compressed object pointers, so the extra heap is used less efficiently.

You can set this with Xms and Xmx in the jvm.options file, like:

-Xms30g

-Xmx30g

You can also set the heap size through the ES_JAVA_OPTS environment variable. But make sure you comment out -Xms and -Xmx in jvm.options if you set it through the environment variable.
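The sizing rule above can be sketched as a small helper. This is only an illustration of the rule of thumb in this article (at most half of RAM, capped at about 30 GB); the function names and the 30 GB cap parameter are my own assumptions, not part of Elasticsearch.

```python
def recommended_heap_gb(ram_gb: float, cap_gb: int = 30) -> int:
    """Heap size in whole GB: at most half of RAM, never above cap_gb.

    cap_gb=30 is this article's rule of thumb, not an Elasticsearch constant.
    """
    half_of_ram = int(ram_gb // 2)  # floor of 50% of the box's RAM
    return max(1, min(half_of_ram, cap_gb))


def jvm_heap_options(ram_gb: float) -> list[str]:
    """Build the two jvm.options lines; Xms and Xmx are kept equal."""
    heap = recommended_heap_gb(ram_gb)
    return [f"-Xms{heap}g", f"-Xmx{heap}g"]


# A 64 GB data node, as in the example above.
print("\n".join(jvm_heap_options(64)))
```

For a 64 GB box this prints the same two lines shown above; for a 16 GB box it would suggest 8 GB.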

But we have 64 GB of memory on the box and have allocated only ~30 GB to the Elasticsearch heap, which is used by the Elasticsearch service alone. So why don’t we allocate the entire 64 GB to Elasticsearch? This is a very common question I encounter.

Other than the Elasticsearch heap, there is another major user of the memory on this box, Lucene:

Lucene is designed to leverage the underlying OS for caching in-memory data structures. Lucene segments are stored in individual files (we will come back to this in point no. 5). Because segments are immutable, these files never change. This makes them very cache friendly, and the underlying OS will happily keep hot segments resident in memory for faster access. These segments include both the inverted index (for full-text search) and doc values (for aggregations).

Lucene’s performance relies on this interaction with the OS. If you give all the available memory to Elasticsearch’s heap, there won’t be any left for Lucene. This can seriously hurt performance, especially search performance. Hence, we balance it out.

Keep an eye on memory usage with free -m and watch the swap used value.
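If you want to check this from a script, a minimal sketch is to read the "Swap:" row of the free -m output. The sample text below is made up for illustration; on a real box you would pass in the actual command output.

```python
# Hypothetical sample of `free -m` output (values are invented).
SAMPLE_FREE_OUTPUT = """\
              total        used        free      shared  buff/cache   available
Mem:          64215       31022        1204         512       31988       32100
Swap:          2047          12        2035
"""


def swap_used_mb(free_output: str) -> int:
    """Return the 'used' column of the Swap row, in MB."""
    for line in free_output.splitlines():
        if line.startswith("Swap:"):
            # Fields after the label are: total, used, free
            return int(line.split()[2])
    raise ValueError("no 'Swap:' line found in free output")


used = swap_used_mb(SAMPLE_FREE_OUTPUT)
print(f"swap used: {used} MB")
```

Any non-zero value on a dedicated Elasticsearch node is worth investigating.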

2: Thread pool

Elasticsearch nodes have various thread pools, such as write, search, snapshot and get, in order to manage the memory consumed from the JVM heap. Each thread pool has a number of threads allocated to it, which are available to perform tasks of that type. Each pool has a thread count (‘size’) and a ‘queue size’ for requests waiting for a free thread.

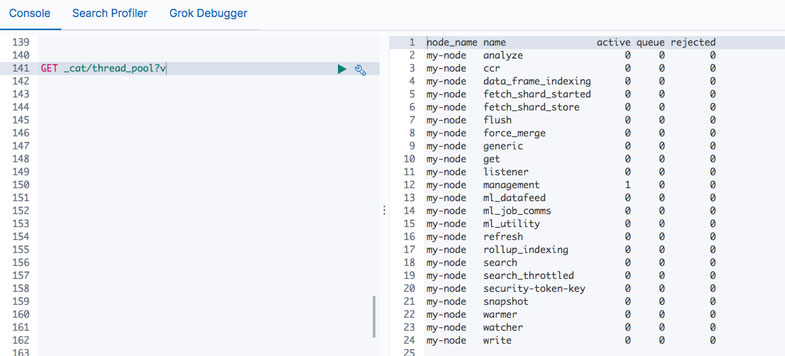

The default thread pool settings are right for most types of workload, and you can check how the pools are doing with the _cat/thread_pool API (GET _cat/thread_pool?v), which lists the active, queue and rejected counts for each pool on each node. Watch the queue and rejected columns before you consider changing anything (changing thread pool settings is an advanced step and not recommended). If a particular thread pool shows a non-zero queue value, that is a warning sign for that task (pool); if it shows rejections, that is a sign to take action for that task (activity). Remember that changing any thread pool setting will impact the other tasks (pools) as well.
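The warning/action rule above can be sketched as a small parser over _cat/thread_pool text output. The sample rows and the function name are hypothetical; the column names follow the node_name, name, active, queue, rejected layout of that API.

```python
# Hypothetical sample of `GET _cat/thread_pool?v` output (values are invented).
SAMPLE_THREAD_POOL = """\
node_name name   active queue rejected
node-1    search      4     0        0
node-1    write       8    37        0
node-2    write       8   200       15
"""


def thread_pool_alerts(cat_output: str) -> list[tuple[str, str, str]]:
    """Return (node, pool, level) for pools that are queueing or rejecting."""
    lines = cat_output.strip().splitlines()
    header = lines[0].split()
    alerts = []
    for line in lines[1:]:
        row = dict(zip(header, line.split()))
        if int(row["rejected"]) > 0:
            # Rejections mean requests were dropped: take action.
            alerts.append((row["node_name"], row["name"], "action"))
        elif int(row["queue"]) > 0:
            # A non-empty queue is only a warning sign.
            alerts.append((row["node_name"], row["name"], "warning"))
    return alerts


for node, pool, level in thread_pool_alerts(SAMPLE_THREAD_POOL):
    print(f"{level}: {pool} pool on {node}")
```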

3: Swapping

As mentioned in point no. 1, we allocate a little under 50% of RAM to the JVM heap for Elasticsearch, and the rest is used by Lucene (through the OS file cache) on the dedicated Elasticsearch box. But most operating systems are memory hungry: they try to use as much memory as possible for cache and swap out unused application memory. This can result in JVM pages being swapped out to disk as well.

Swapping is bad for performance, and that’s why it’s critical to keep an eye on swap used with free -m. You can disable swapping to reduce the impact on GC (garbage collection, the JVM process that frees memory held by unused objects to make room for new ones), because a GC that has to touch swapped-out pages can take far longer.

You can run

sudo swapoff -a

on a Linux box, which doesn’t require a restart.

To disable it permanently, you will need to edit the /etc/fstab file and comment out any lines that contain the word swap.

You can also set the memory lock parameter in elasticsearch.yml so that the JVM locks its memory in RAM and is never swapped out. The default is false.

bootstrap.memory_lock: true

You need to restart the Elasticsearch service in order to enable this.
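After the restart it is worth confirming that the lock actually took effect. A minimal sketch, assuming you have fetched the node info (for example from GET _nodes/process) and parsed it into a dictionary; the sample response and the function name below are hypothetical, and only the nodes / process / mlockall fields are read.

```python
# Hypothetical, trimmed node info response (node ids and names are invented).
SAMPLE_NODES_INFO = {
    "nodes": {
        "aBcD1": {"name": "data-1", "process": {"mlockall": True}},
        "eFgH2": {"name": "data-2", "process": {"mlockall": False}},
    }
}


def nodes_without_memory_lock(nodes_info: dict) -> list[str]:
    """Return the names of nodes whose process reports mlockall as false."""
    unlocked = []
    for node_id, node in nodes_info.get("nodes", {}).items():
        if not node.get("process", {}).get("mlockall", False):
            # Fall back to the node id if the name is not in the response.
            unlocked.append(node.get("name", node_id))
    return sorted(unlocked)


print(nodes_without_memory_lock(SAMPLE_NODES_INFO))
```

A node that still appears in this list usually means the service is not allowed to lock memory at the OS level.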

4: Shards Size

How many shards do you need for the data stored in an Elasticsearch index? The answer is: it depends! A shard is a unit of an index that stores your actual data on the distributed nodes. It is generally observed that one shard works well with around 30 GB to 50 GB of data (I prefer a range of around 20 GB to 35 GB), and you can scale your index accordingly. This assumes that you have 64 GB of RAM on each data node, with good disk I/O and adequate CPU.

Index sizing works best when the data volume is predictable, because it then becomes straightforward to set the number_of_shards parameter. If your use case is business analytics or enterprise search, where the data comes from fixed sources like an RDBMS, files etc., and you know your daily/monthly/yearly data ingestion along with your search queries, then it is easy to set the number of shards. For example, if you know that your index is going to grow by around 200-250 GB per month and each month’s index is archived, then you can set around 5 to 6 shards per index.
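The arithmetic behind that estimate can be sketched as below. The 45 GB target per shard is a value I picked from the 30 GB to 50 GB range for illustration; treat it as a tunable assumption rather than a rule.

```python
import math


def primary_shards(index_size_gb: float, target_shard_gb: float = 45.0) -> int:
    """Estimate primary shards so each holds at most target_shard_gb of data."""
    if index_size_gb <= 0 or target_shard_gb <= 0:
        raise ValueError("sizes must be positive")
    return max(1, math.ceil(index_size_gb / target_shard_gb))


# Monthly index growing by 200-250 GB, and a small index of a few GB.
for size_gb in (200, 250, 3):
    print(f"{size_gb} GB -> {primary_shards(size_gb)} shard(s)")
```

With the assumed 45 GB target, 200 GB and 250 GB come out at 5 and 6 shards, and an index of a few GB needs only 1.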

But if you are not sure about your ingestion rate and search rate, then choosing the number of shards is tough. This is especially true for use cases like centralized logging for different services and apps, or infra monitoring using ELK with different beats (like metricbeat, filebeat, heartbeat etc.). When your index gets created daily, it is difficult to settle on the number of shards.

Based on my experience, if you are creating daily indices for these beats, your data is around a few GB (2-4 GB max) per day per index. In this case, a default setting of number_of_shards: 5 doesn’t work well. Consider changing it to 1 (instead of 5), because the daily data will easily fit into 1 shard, and it is easier for Elasticsearch to write and read this small amount of data in 1 shard than in many.

number_of_shards: 1

In recent versions of Elasticsearch (7.0 and later), the default number_of_shards is already 1.

When you have use cases like enterprise search or site search where the number of search requests is high (I would say more than 500 to 1000 search requests per second, depending on the use case), you might need to consider more replica shards. Search requests can then be served from these replicas as well as from the primaries.

If you are setting up a new cluster or index, it is easy to set number_of_shards and number_of_replicas at the index level by adding them to the index settings. You can set them in beats or logstash, wherever you are creating the index, or add them to index templates.
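As an illustration, here is a sketch that builds the body of an index template carrying these two settings. It assumes the composable template format (PUT _index_template/<name>, available from Elasticsearch 7.8); older legacy templates put settings at the top level instead. The pattern metricbeat-* and the function name are placeholders of mine.

```python
import json


def index_template_body(pattern: str, shards: int, replicas: int) -> dict:
    """Body for a composable index template that fixes shard and replica counts."""
    return {
        "index_patterns": [pattern],
        "template": {
            "settings": {
                "index": {
                    "number_of_shards": shards,
                    "number_of_replicas": replicas,
                }
            }
        },
    }


# Daily beats indices of a few GB each: 1 primary shard, 1 replica.
body = index_template_body("metricbeat-*", shards=1, replicas=1)
print(json.dumps(body, indent=2))
```

Every new index matching the pattern then picks up these settings at creation time.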

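But if you already have an index and now want to increase or decrease its number of primary shards, you need to reindex the data into a new index using the _reindex API, because number_of_shards is fixed when an index is created. number_of_replicas, on the other hand, can be changed on a live index.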
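A reindex for this purpose is typically two requests: create the destination index with the new shard count, then copy the documents across. The sketch below only builds the request bodies; the index names and the helper name are hypothetical, and sending the requests is left to whichever client you use.

```python
import json


def reindex_plan(source: str, dest: str, shards: int, replicas: int) -> list[tuple[str, str, dict]]:
    """Return (method, path, body) steps to move data into a re-sharded index."""
    create_body = {
        "settings": {
            "index": {"number_of_shards": shards, "number_of_replicas": replicas}
        }
    }
    reindex_body = {"source": {"index": source}, "dest": {"index": dest}}
    return [
        ("PUT", f"/{dest}", create_body),      # 1. create the new index first
        ("POST", "/_reindex", reindex_body),   # 2. then copy documents into it
    ]


# Hypothetical index names, going from 5 primaries down to 1.
for method, path, body in reindex_plan("logs-old", "logs-new", shards=1, replicas=1):
    print(method, path, json.dumps(body))
```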

5: Segment size

In point no. 4, we saw how to choose the number of shards to improve indexing performance. In my experience, especially when implementing large clusters with daily index creation, there is often room to improve performance further at the index ‘segment’ level as well.

A ‘segment’ is a Lucene inverted index. You may be confused, I know. An “index” in Elasticsearch is more like a database in RDBMS terms, whereas a segment is the actual index on disk in the RDBMS sense.

A “segment” basically stores copies of the real documents in inverted index form, and a new one is written at every commit, at every refresh interval, or when the indexing buffer is full. The default refresh interval is 1s.

The more segments there are on disk, the longer each search takes. So behind the scenes, Lucene runs a merge process on segments (within each index) that are similar in size. If there are 2 segments of similar size, Lucene merges them and creates a new, 3rd segment. After the merge, the old segments are dropped. This process is repeated.
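To get a feel for how merging keeps the segment count down, here is a deliberately simplified toy model. It is not Lucene’s actual merge policy: it assumes every refresh writes one segment of size 1 and that two segments merge only when their sizes are exactly equal.

```python
def segments_after_refreshes(refreshes: int) -> list[int]:
    """Toy model: sizes of the segments left after a number of refreshes."""
    segments: list[int] = []
    for _ in range(refreshes):
        segments.append(1)  # each refresh writes one new small segment
        # Merge the two newest segments for as long as they are the same size.
        while len(segments) >= 2 and segments[-1] == segments[-2]:
            merged = segments.pop() + segments.pop()
            segments.append(merged)  # the old pair is dropped, the merged one kept
    return segments


for n in (7, 10, 60):
    print(f"{n} refreshes -> segments of size {segments_after_refreshes(n)}")
```

Even in this toy model, 60 refreshes leave only a handful of segments instead of 60, at the cost of repeated merge work.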

Once the data is written into segments (on refresh), it is available for search. So on the default refresh setting, i.e. 1s, your data becomes searchable about 1s after it is indexed. But with many small segments on disk and repeated merge processes, your search performance is likely to drop. If you do not need freshly ingested data to be searchable within 1 second, and are okay to wait a few more seconds in exchange for better search performance from fewer segments on disk (and fewer merges), then I would recommend setting index.refresh_interval to 30s instead of the default 1s. You can set this parameter at the index level.
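A quick sketch of what this change means in numbers, together with the settings body you would send to the index (for example with PUT /<index>/_settings). The helper names are my own, and the refresh count is an upper bound that assumes documents arrive continuously.

```python
import json


def max_refreshes_per_hour(refresh_interval_s: int) -> int:
    """Upper bound on refreshes (new segments) per shard per hour."""
    return 3600 // refresh_interval_s


def refresh_settings_body(interval: str) -> dict:
    """Index settings body that changes only the refresh interval."""
    return {"index": {"refresh_interval": interval}}


print("default 1s :", max_refreshes_per_hour(1), "refreshes/hour")
print("tuned 30s  :", max_refreshes_per_hour(30), "refreshes/hour")
print(json.dumps(refresh_settings_body("30s")))
```

Going from 1s to 30s cuts the worst case from 3600 to 120 new segments per shard per hour.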

If you are changing the refresh interval, I would also recommend raising the index buffer size from the default 10% to 20% so that the buffer can hold more data between refreshes. This gives indexing more room in the buffer. You can set this with indices.memory.index_buffer_size: 20% at the node level, on each node.
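Since the buffer is a percentage of the JVM heap, it helps to see what the change means in absolute terms. A minimal sketch, assuming the ~30 GB heap from point no. 1; the function name is hypothetical.

```python
def index_buffer_gb(heap_gb: float, percent: float) -> float:
    """Indexing buffer size in GB for a given heap and buffer percentage."""
    return heap_gb * percent / 100


heap_gb = 30  # heap size assumed from point no. 1
for percent in (10, 20):
    print(f"{percent}% of {heap_gb} GB heap -> {index_buffer_gb(heap_gb, percent)} GB buffer")
```

Keep in mind that this buffer is shared by all shards on the node, and that the extra memory comes out of the same heap that serves searches.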

In part 2 of this article, I will shed light on more parameters to consider in order to improve overall Elasticsearch cluster performance. Stay tuned for the next issue!