The incident did not begin with a system outage.

It began with a missing file.

A routine SFTP transfer—one that had worked reliably for years—quietly failed. There was no alert, no retry, no immediate indication that anything had gone wrong. A password had expired. A script executed exactly as written. A downstream system waited for data that never arrived.

By the time the issue surfaced, a time-bound operational workflow was already compromised.

That moment forced a question many enterprises eventually confront, often too late: are we still treating file and data transfer as a peripheral concern, or as a foundational part of system reliability?

For years, SFTP combined with scripts has been the default mechanism for moving files and datasets between enterprise systems. It is familiar, simple on paper, and easy to deploy. But at scale, across multiple applications, environments, operational windows, and regulatory boundaries, it becomes fragile in ways that are hard to detect until they matter most.

This article describes how, for a large banking, financial services, and insurance (BFSI) client, we stepped back from that fragility and rebuilt enterprise file and data transfer around a centralized Kafka-based messaging platform designed for predictability, auditability, and operational discipline.

Why SFTP Breaks Down at Enterprise Scale

SFTP itself is not the problem. The problem is how it gets used in real enterprise environments.

Over time, most organizations accumulate:

- Dozens or hundreds of scripts owned by different teams

- Credentials embedded in cron jobs and configuration files

- Implicit operational assumptions that are rarely documented

- Silent failures that surface only through downstream impact

At small scale, this works. At enterprise scale, it creates risk.

There is no native concept of end-to-end integrity validation. No consistent retry semantics. No centralized visibility. DC–DR behavior is often an afterthought, handled manually during incidents. And critically, failures tend to be silent, not explicit.

What looks like simplicity slowly turns into operational debt.

We realized that continuing to patch this model would only compound the problem. The question was no longer how to improve SFTP, but whether SFTP should remain the backbone of file and data movement at all.

Defining the Objective Before Choosing Technology

Before introducing any new technology, we aligned on a clear objective.

The goal was not to replace SFTP with another file transfer protocol.

The goal was to:

- Eliminate operational fragility caused by scattered scripts and credentials

- Introduce a centralized, controlled mechanism for file and data movement

- Ensure predictable behavior during time-bound and critical workflows

- Make the system observable, auditable, and resilient by design

This clarity shaped every architectural decision that followed. We were not optimizing for novelty. We were optimizing for trust.

Setting a Disciplined Scope

One of the most important decisions we made was to keep the scope sharply defined.

The scope included:

- Migrating existing SFTP-based file and data transfers to a centralized messaging platform

- Building Java-based source and sink plugins for applications

- Supporting controlled retries and contingency workflows

- Treating cluster setup, monitoring, and patching as part of the platform—not post-delivery concerns

What we deliberately avoided was turning this into a general-purpose integration platform. The system was designed for file and data transfer with clear operational boundaries, not open-ended data processing.

This discipline prevented scope creep and helped the platform remain predictable in production.

Technology Choices and Platform Design

We designed the solution around application-embedded Java plugins, compatible with JDK 8 and above and independent of the underlying operating system. This allowed the platform to integrate cleanly across heterogeneous application environments.

At the core sits a centralized Kafka platform, implemented using Confluent Kafka running in KRaft mode. Removing ZooKeeper simplified the control plane and aligned the platform with modern Kafka operational practices.

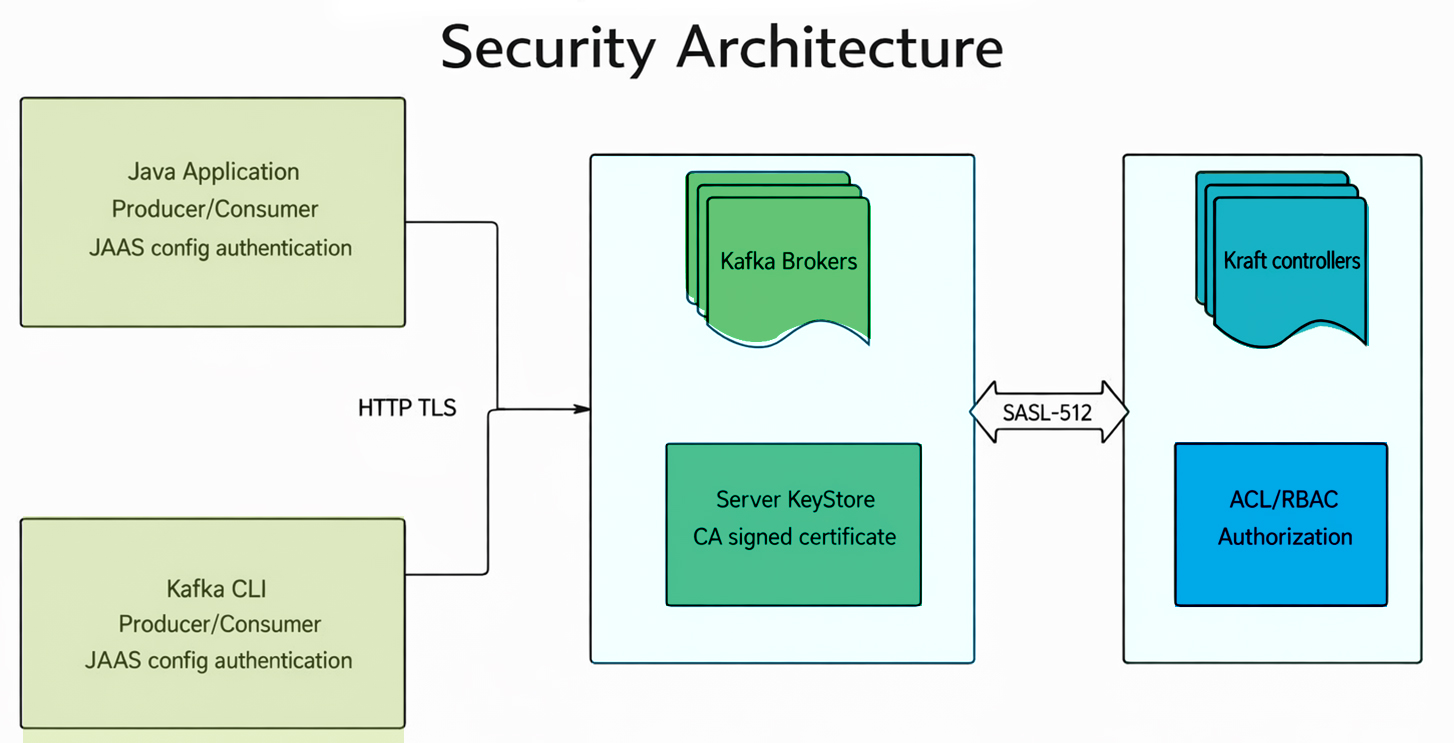

Security was treated as foundational, not additive:

- All communication is encrypted using TLS

- Client authentication is handled via JAAS

- Authorization is enforced through ACLs and role-based access control

- Artifacts are packaged as JARs and distributed in a controlled manner

- Infrastructure provisioning and configuration are automated using Ansible

Monitoring and operational visibility are provided through the ELK stack (Elasticsearch, Logstash, and Kibana), ensuring that platform behavior is observable in real time.

These choices reflect a core principle: a platform is only as reliable as its ability to be operated under pressure.

How the Platform Works End to End

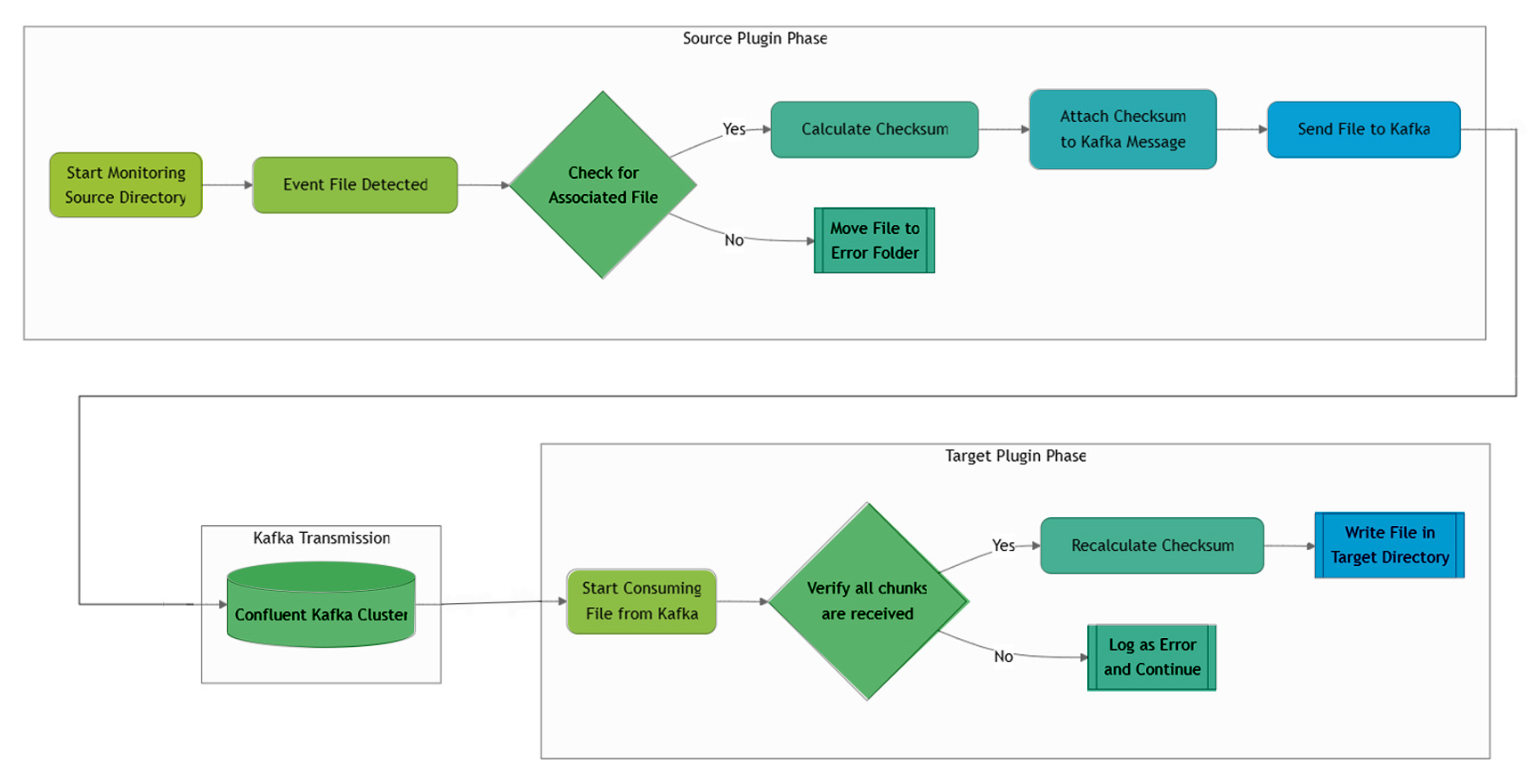

Source-Side Processing

On the source side, the plugin continuously monitors configured directories.

When a trigger file is detected:

- The plugin checks for the presence of the associated data file

- If the data file is missing, the trigger file is moved to an error directory

- If the file is present, a checksum is calculated

- The file or data payload is sent to Kafka in chunks, along with metadata and checksum information

This ensures that incomplete or inconsistent states are explicitly handled rather than silently ignored.
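The source-side steps above can be sketched roughly as follows. This is a minimal illustration rather than the plugin's actual code: the class and method names (`SourceSketch`, `onTrigger`, `split`) are hypothetical, SHA-256 is an assumed checksum algorithm, and the call that would publish each chunk to Kafka is left out so the example needs only the JDK.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical sketch; names and the SHA-256 choice are assumptions.
final class SourceSketch {

    /** One message-sized slice of a file plus the metadata a sink would need. */
    static final class Chunk {
        final String fileName;
        final int index;            // zero-based position of this chunk
        final int total;            // number of chunks in the whole file
        final String fileChecksum;  // checksum of the complete file
        final byte[] payload;

        Chunk(String fileName, int index, int total, String fileChecksum, byte[] payload) {
            this.fileName = fileName;
            this.index = index;
            this.total = total;
            this.fileChecksum = fileChecksum;
            this.payload = payload;
        }
    }

    /** Hex-encoded SHA-256 of the given bytes. */
    static String sha256Hex(byte[] data) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(data);
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 not available", e);
        }
    }

    /** Splits the data into chunks of at most chunkSize bytes, each carrying the file checksum. */
    static List<Chunk> split(String fileName, byte[] data, int chunkSize) {
        if (chunkSize <= 0) {
            throw new IllegalArgumentException("chunkSize must be positive");
        }
        String checksum = sha256Hex(data);
        // An empty file still yields one (empty) chunk so the sink sees the transfer.
        int total = Math.max(1, (data.length + chunkSize - 1) / chunkSize);
        List<Chunk> chunks = new ArrayList<>(total);
        for (int i = 0; i < total; i++) {
            int from = i * chunkSize;
            int to = Math.min(data.length, from + chunkSize);
            chunks.add(new Chunk(fileName, i, total, checksum, Arrays.copyOfRange(data, from, to)));
        }
        return chunks;
    }

    /**
     * Handles one trigger file. If the data file is missing, the trigger is moved
     * to errorDir and an empty list is returned; otherwise the chunks to send are returned.
     * Reads the whole file into memory, which a real plugin would avoid for large files.
     */
    static List<Chunk> onTrigger(Path trigger, Path dataFile, Path errorDir, int chunkSize)
            throws IOException {
        if (!Files.isRegularFile(dataFile)) {
            Files.createDirectories(errorDir);
            Files.move(trigger, errorDir.resolve(trigger.getFileName()),
                    StandardCopyOption.REPLACE_EXISTING);
            return Collections.emptyList();
        }
        return split(dataFile.getFileName().toString(), Files.readAllBytes(dataFile), chunkSize);
    }
}
```

Carrying the chunk index, the chunk count, and the whole-file checksum on every chunk is one way to let the receiving side detect both missing chunks and corrupted content without any out-of-band state.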

Target-Side Processing

On the target side, the sink plugin consumes messages from Kafka and:

- Verifies that all chunks have been received

- Logs and flags missing chunks as errors

- Recalculates and validates the checksum

- Writes the file or data to the target directory only after integrity is confirmed

This flow ensures end-to-end data integrity while allowing the system to absorb transient failures without corrupting downstream state.
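The target-side checks above could look something like the following sketch. It assumes that chunks arrive keyed by a zero-based index and that the expected chunk count and a SHA-256 checksum travel with the messages. Those details, along with the names `SinkSketch`, `assemble`, and `writeVerified`, are illustrative assumptions rather than the plugin's real interface, and the Kafka consumer loop is omitted.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Hypothetical sketch; names and the SHA-256 choice are assumptions.
final class SinkSketch {

    /** Returns the indexes in [0, expectedTotal) that have not been received. */
    static List<Integer> missingChunks(Map<Integer, byte[]> received, int expectedTotal) {
        List<Integer> missing = new ArrayList<>();
        for (int i = 0; i < expectedTotal; i++) {
            if (!received.containsKey(i)) {
                missing.add(i);
            }
        }
        return missing;
    }

    /** Reassembles the file, failing loudly on missing chunks or a checksum mismatch. */
    static byte[] assemble(Map<Integer, byte[]> received, int expectedTotal, String expectedSha256) {
        List<Integer> missing = missingChunks(received, expectedTotal);
        if (!missing.isEmpty()) {
            throw new IllegalStateException("missing chunks: " + missing);
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        for (int i = 0; i < expectedTotal; i++) {
            byte[] part = received.get(i);
            out.write(part, 0, part.length);
        }
        byte[] data = out.toByteArray();
        String actual = sha256Hex(data);
        if (!actual.equalsIgnoreCase(expectedSha256)) {
            throw new IllegalStateException(
                    "checksum mismatch: expected " + expectedSha256 + " but got " + actual);
        }
        return data;
    }

    /** Writes to a temporary file first, then moves it into place once it is complete. */
    static void writeVerified(byte[] data, Path targetDir, String fileName) throws IOException {
        Files.createDirectories(targetDir);
        Path temp = Files.createTempFile(targetDir, fileName, ".part");
        Files.write(temp, data);
        Files.move(temp, targetDir.resolve(fileName), StandardCopyOption.REPLACE_EXISTING);
    }

    static String sha256Hex(byte[] data) {
        try {
            byte[] digest = MessageDigest.getInstance("SHA-256").digest(data);
            StringBuilder hex = new StringBuilder(digest.length * 2);
            for (byte b : digest) {
                hex.append(String.format("%02x", b & 0xff));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 not available", e);
        }
    }
}
```

In this sketch `writeVerified` is only ever called with the output of `assemble`, so a file appears under its final name in the target directory only after both the completeness check and the checksum check have passed.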

Centralized Messaging Platform (CMP) High-Level End-to-End Flow

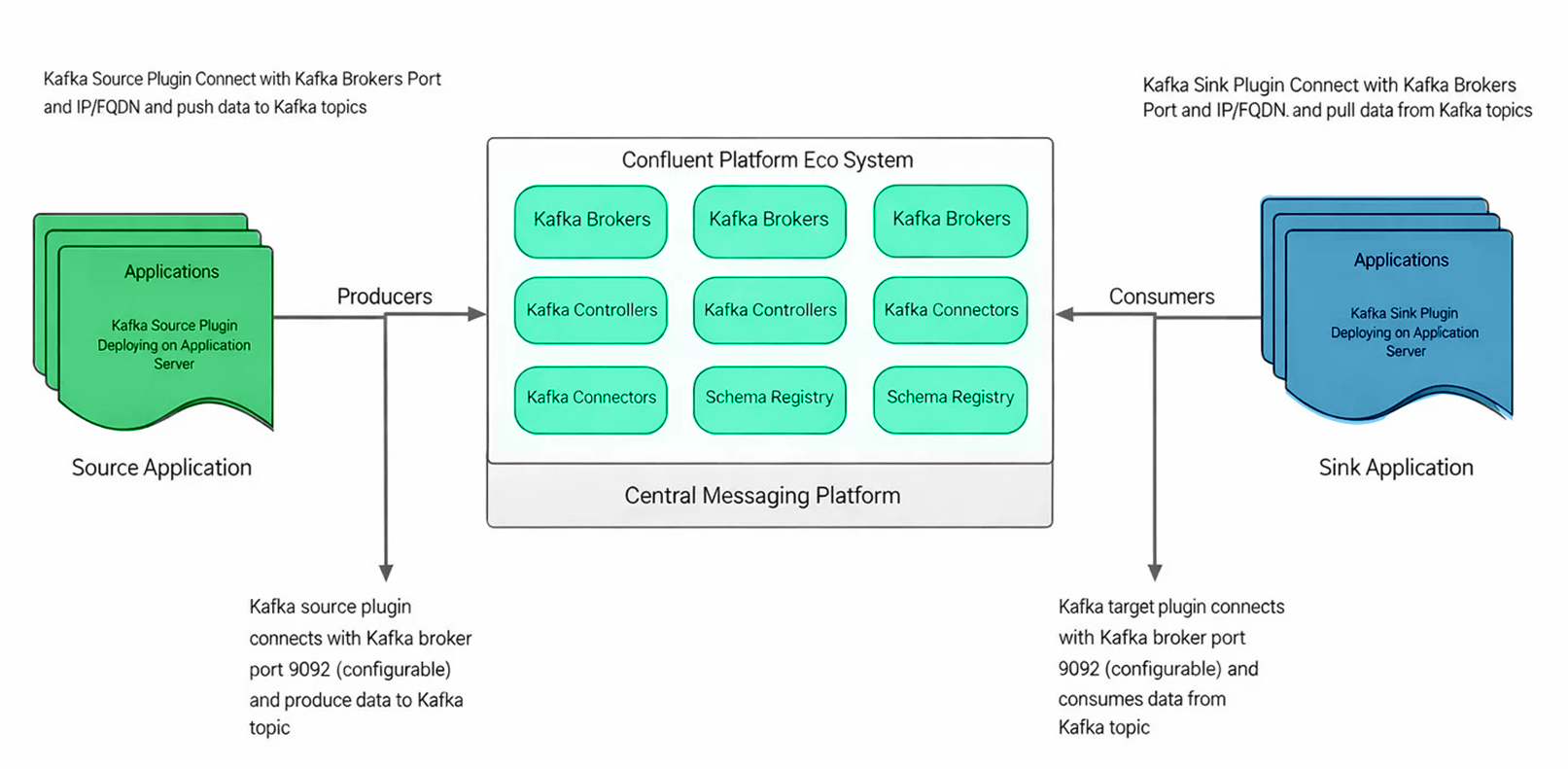

Architecture and Application Integration Model

We deliberately chose an application-embedded plugin model.

Source applications run Kafka source plugins that produce file and data streams into centralized topics. Sink applications run corresponding Kafka sink plugins that consume from those topics.

The centralized messaging platform includes:

- Kafka brokers

- KRaft controllers

- Connectors

- Schema registry components

This architecture allows multiple applications, servers, and directories to participate in file and data transfer without tight coupling. It provides a scalable, consistent integration model that can evolve without breaking existing workflows.

High-Level CMP Architecture with Application Integration

Security and Access Control Design

Security was treated as a design constraint, not an afterthought.

Client authentication is handled through JAAS configuration. All communication between clients, brokers, and controllers is encrypted using TLS. Authorization is enforced through ACLs and role-based access control, ensuring that applications can access only the topics they are permitted to.

This approach ensures that security posture improves as the platform scales, rather than degrading under operational pressure.
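To make the client side of this concrete, here is a hedged sketch of the kind of settings a plugin might pass to its Kafka client. The property keys are standard Kafka client configuration names. Everything else is assumed for illustration: the `SecureClientConfigSketch` helper, the choice of `SASL_SSL` with the SCRAM-SHA-512 mechanism, and the idea of supplying the JAAS entry inline via `sasl.jaas.config`. In practice, credentials would come from a secrets store rather than method arguments.

```java
import java.util.Properties;

// Hypothetical helper; the SASL mechanism and login module are assumptions.
final class SecureClientConfigSketch {

    static Properties build(String bootstrapServers, String username, String password,
                            String truststorePath, String truststorePassword) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);

        // TLS for transport encryption plus SASL for client authentication.
        props.setProperty("security.protocol", "SASL_SSL");
        props.setProperty("sasl.mechanism", "SCRAM-SHA-512");

        // Inline JAAS entry naming the login module and the client's credentials.
        props.setProperty("sasl.jaas.config",
                "org.apache.kafka.common.security.scram.ScramLoginModule required "
                        + "username=\"" + username + "\" "
                        + "password=\"" + password + "\";");

        // Truststore used to verify the brokers' certificates.
        props.setProperty("ssl.truststore.location", truststorePath);
        props.setProperty("ssl.truststore.password", truststorePassword);
        return props;
    }
}
```

With authentication in place, the ACLs on the broker side decide which topics the authenticated principal may read or write, so each application's configuration identifies it without granting access by itself.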

Capabilities Enabled in Production

In live production environments, the platform supports:

- File and data transfer across multiple directories on the same server

- Transfers across multiple servers and applications

- Scenarios where multiple files or datasets are associated with a single trigger file

Importantly, the platform allows coexistence with legacy SFTP consumers. State is maintained so that older workflows can continue functioning alongside streaming-based consumers. Trigger files are preserved, ensuring that existing semantics are not broken during migration.

This made adoption incremental rather than disruptive.

Operational Resilience and Governance

The platform includes configurable retry mechanisms that automatically attempt transfer when dependent services are restored. Contingency scripts are available for controlled manual execution when required.
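One common way to implement a configurable retry of this kind is a bounded loop with an increasing delay between attempts. The sketch below shows that pattern under stated assumptions: the `RetrySketch` class, the `maxAttempts` and `initialDelayMs` parameters, and the doubling backoff are illustrative choices, not a description of the platform's actual retry policy.

```java
import java.util.function.Supplier;

// Hypothetical sketch of a bounded retry with exponential backoff.
final class RetrySketch {

    /**
     * Runs the action until it succeeds or maxAttempts is reached.
     * The delay doubles after each failed attempt; the last failure is rethrown.
     */
    static <T> T withRetry(Supplier<T> action, int maxAttempts, long initialDelayMs) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        RuntimeException last = null;
        long delayMs = initialDelayMs;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return action.get();
            } catch (RuntimeException e) {
                last = e;
                if (attempt < maxAttempts) {
                    sleep(delayMs);
                    delayMs *= 2;
                }
            }
        }
        // Surface the failure explicitly instead of swallowing it.
        throw last;
    }

    private static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting to retry", e);
        }
    }
}
```

Bounding the number of attempts matters as much as retrying: once the limit is reached, the failure is rethrown so that it can be logged and handed to a manual contingency path rather than looping silently.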

Integrity checks, audit logs, and configuration validation are built into the system to support governance and compliance requirements. Failures are explicit, visible, and actionable.

What Changed After Implementation

Operationally, the shift was immediate.

Dependence on scattered scripts and manual interventions was significantly reduced. File and data transfer moved from an opaque, fragile mechanism to a single, centralized, observable platform.

More importantly, confidence increased. Delivery behavior became predictable. Failures became diagnosable. The system behaved consistently during critical operational windows.

What This Taught Us in Production

This initiative was not about replacing a protocol.

It was about introducing discipline into how file and data transfer happens across enterprise systems.

Design choices such as trigger files, checksum validation, controlled retries, and constrained scope may seem small in isolation. Together, they create a platform that earns trust in production.

Leading this effort reinforced a simple lesson: reliable platforms are built through careful constraints, not complexity. When those constraints are respected, the system proves itself—quietly, consistently, and when it matters most.